Physical components are becoming more pervasive and are often equipped with sensors and actuators that allow them to interact with their environment and with each other in what is called the Internet of Things (IoT). Systems that combine these kinds of components are called cyber-physical systems (CPS). CPS are a key element of modern industrial environments, known as Industry 4.0, and are becoming widespread in several sectors, enabling smart cities, smart homes, and smart transportation.

These physical components communicate with each other through the services they expose. These services are typically asynchronous: for example, a service may continuously provide context information obtained from the physical sensors of the underlying component. This prevalence of asynchronous communication has led to an architectural style known as event-driven service-oriented architecture. In these architectures, the constituent services communicate by notifying events following the publish-subscribe pattern: a service producer publishes an event to a service broker, which forwards it to all the service consumers subscribed to that type of event. In this manner, producers and consumers are highly decoupled, which facilitates scalability, reduces dependencies, and improves fault tolerance.
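To make the publish-subscribe pattern concrete, here is a minimal in-memory sketch of a broker that routes events by type. The `Broker` class and its `subscribe`/`publish` methods are hypothetical names chosen for illustration; delivery here is a synchronous function call, whereas real brokers deliver messages asynchronously over a network.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

# A consumer is any callable that receives an event payload.
Consumer = Callable[[Any], None]


class Broker:
    """Minimal in-memory publish-subscribe broker (illustrative, not production-ready)."""

    def __init__(self) -> None:
        # Event type -> consumers subscribed to that type of event.
        self._subscribers: DefaultDict[str, List[Consumer]] = defaultdict(list)

    def subscribe(self, event_type: str, consumer: Consumer) -> None:
        self._subscribers[event_type].append(consumer)

    def publish(self, event_type: str, payload: Any) -> int:
        # The producer only knows the broker and the event type, never the consumers.
        consumers = self._subscribers.get(event_type, [])
        for consumer in consumers:
            consumer(payload)
        return len(consumers)  # number of deliveries, returned for inspection only


# Usage: two consumers subscribe to the same event type; one publish reaches both.
received = []
broker = Broker()
broker.subscribe("temperature", lambda payload: received.append(("hvac", payload)))
broker.subscribe("temperature", lambda payload: received.append(("dashboard", payload)))
deliveries = broker.publish("temperature", {"celsius": 21.5})
```

Because the producer never holds references to consumers, new consumers can be added without touching the producer, which is the decoupling described above.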

Event-driven service-oriented architectures have surged in popularity because they suit the needs of CPS well. According to a 2021 industry survey conducted by Solace, 85% of organizations recognize the business value of event-driven architectures and are striving to adopt them. However, a subsequent survey by the same organization in 2022 found that the biggest technical challenge in adopting this architecture is integrating it with existing applications.

Several initiatives have emerged to facilitate the integration of this type of IoT service. For instance, the Web of Things (WoT) proposal aims to reuse and extend standard web technologies and apply them to the IoT domain. Along the same lines, specifications like AsyncAPI have emerged to provide machine-readable definitions of asynchronous service APIs. AsyncAPI is independent of the underlying technology and protocol and can therefore describe services built on web-based technologies such as WebSocket.

Although these initiatives provide a common framework that enables the definition, discoverability, and interoperability of IoT-based services, they do not provide standards to define the quality of service (QoS) that is expected or required from them. For example, latency, availability, and throughput are key QoS metrics that need to be guaranteed to ensure the proper operation of the system. Although several approaches have been proposed to define and specify QoS in the fields of WoT and IoT, they are limited in the number and type of metrics they address.

Moreover, the required QoS is usually formalized as a service level agreement (SLA), a contract that establishes the QoS agreed between service providers and service consumers. Several formal, machine-readable languages have been proposed for specifying SLAs. The primary reason for making them machine-readable is that they can then be processed by a computer, for instance to automatically derive and instantiate monitors that measure compliance with the conditions established in the SLA. Although some SLA proposals for WoT and IoT exist, they are limited in expressivity and are not aligned with current standards such as WS-Agreement or the AsyncAPI specification.

To cover this gap, our paper “AsyncSLA: Towards a Service Level Agreement for Asynchronous Services”, by Marc Oriol, Abel Gómez, and Jordi Cabot, which will be presented at SAC 2024, proposes:

- a quality model that comprises and structures several quality dimensions for asynchronous services based on the ISO/IEC 25010 standard

- a domain-specific language (DSL) to specify SLAs for asynchronous services considering these dimensions, which adapts the WS-Agreement standard and integrates it with the AsyncAPI specification

- a tool that supports the proposed language and quality model.

We argue that these contributions are a first step towards supporting automatic techniques that rely on machine-readable QoS in an IoT or asynchronous context, such as the automatic generation of monitors, SLA assessment, or the QoS-based discovery of WoT services. You can read the full paper or keep reading for an extended summary.

Quality of Service for Asynchronous Services

The first question when proposing a QoS approach for asynchronous systems is: which quality dimensions should we be concerned about, and which metrics can be used to measure them? For instance, time performance may be evaluated with the response time in synchronous services (i.e., the time elapsed between the client sending a request and receiving the response). In asynchronous services, however, there is no request-response pair, so other metrics are needed to measure performance (e.g., the time from the packet generation by the source node to the packet reception by the destination node).
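The contrast between the two time measurements can be sketched as follows. The `Delivery` record and the function names are hypothetical; the sketch assumes timestamps in seconds and, for latency, that the clocks of the producer and consumer nodes are synchronized, since the two timestamps come from different machines.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    """One message as observed by one consumer; timestamps in seconds."""
    generated_at: float  # when the source node produced the message
    received_at: float   # when the destination node received it


def response_time(request_sent_at: float, response_received_at: float) -> float:
    # Synchronous services: both timestamps are taken by the same client.
    return response_received_at - request_sent_at


def latency(delivery: Delivery) -> float:
    # Asynchronous services: there is no request, so the measurement starts when
    # the producer generates the message and ends at the consumer. This assumes
    # the two nodes' clocks are synchronized.
    return delivery.received_at - delivery.generated_at
```

For example, `latency(Delivery(generated_at=10.0, received_at=10.25))` evaluates to `0.25` seconds.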

In this work, we propose a quality model for asynchronous services aligned with the ISO/IEC 25010 standard. To build this quality model, we gathered a variety of existing quality metrics and their definitions from the literature, covering both services in general (i.e., metrics that apply to both synchronous and asynchronous services) and asynchronous services in particular.

| Quality Metric | Parent from ISO/IEC 25010 | Definition |
|---|---|---|
| Probability of correctness | Functional suitability (correctness) | The probability that the content of the message accurately represents the corresponding real-world situation. |
| Precision | Functional suitability (correctness) | How exactly the information provided in the message mirrors reality. Precision is specified with bounds. |
| Up-to-dateness | Functional suitability (correctness) | How old the information is upon its receipt. |
| Latency | Performance (time behaviour) | The total time from the packet generation by the source node to the packet reception by the destination node. |
| Round trip time | Performance (time behaviour) | The time from the packet generation by the source node to the reception of the ACK. |
| Jitter | Performance (time behaviour) | Variation of latency. |
| Throughput | Performance (time behaviour) | The number of error-free bits transmitted in a given period of time. |
| Message frequency | Performance (time behaviour) | The number of messages transmitted in a given period of time. It is the inverse of the time period. |
| Time period | Performance (time behaviour) | The amount of time elapsed between two messages. It is the inverse of the message frequency. |
| Memory used | Performance (resource utilization) | The amount of memory used by the service. |
| CPU used | Performance (resource utilization) | The amount of CPU used by the service. |
| Bandwidth | Performance (resource utilization) | The amount of bandwidth used by the service. |
| Payload size | Performance (resource utilization) | The size of the payload enclosed in the message sent by the service. |
| Max. subscribers | Performance (capacity) | The maximum number of subscribers the service is capable of handling. |
| Max. throughput | Performance (capacity) | The maximum throughput the service is capable of providing. |
| Load balancing | Performance (capacity) | To what extent the service has load balancing capabilities. |
| CPU capacity | Performance (capacity) | The maximum CPU the service is capable of using. |
| Memory capacity | Performance (capacity) | The maximum memory the service is capable of using. |
| Documentation | Usability (recognizability) | The extent to which a web service interface contains an adequate level of documentation. |
| Discoverability | Usability (recognizability) | The ease and accuracy of consumers’ search for an individual service, based on the description of the service. |
| Expected failures | Reliability (maturity) | Expected number of failures over a time interval. |
| Type consistency | Reliability (maturity) | Whether the data flow is consistent with the definition of data types. |
| Time to fail | Reliability (maturity) | Time the service runs before failing. |
| Availability | Reliability (availability) | The probability that a service is available at any given time. |
| Uptime | Reliability (availability) | The duration for which the service has been operational continuously without failure. |
| Packet loss | Reliability (availability) | Ratio of the number of undelivered packets to the number of sent packets. |
| Disaster resilience | Reliability (fault tolerance) | How well the service resists natural and human-made disasters. |
| Exception handling | Reliability (fault tolerance) | Internal activities that are performed in the case of failures during the execution of the service. |
| Time to repair | Reliability (recoverability) | Time needed to repair and restore service after a failure. |
| Failover | Reliability (recoverability) | Whether the service employs failover resources, and how quickly. |
| Versioning | Maintainability (modifiability) | Whether the service implements versioning strategies to ensure backward compatibility. |
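Several of the time-behaviour and availability metrics in the table can be computed from simple message logs. The following is a minimal sketch with hypothetical function names. The table defines jitter only as "variation of latency", so the mean absolute difference between consecutive latencies used here is one assumed interpretation among several (standard deviation or the smoothed estimator of RFC 3550 are alternatives). The time period is likewise assumed to be the average gap between consecutive messages.

```python
from statistics import mean
from typing import List


def jitter(latencies: List[float]) -> float:
    """Assumed definition: mean absolute difference between consecutive latencies."""
    diffs = [abs(b - a) for a, b in zip(latencies, latencies[1:])]
    return mean(diffs) if diffs else 0.0


def packet_loss(sent: int, delivered: int) -> float:
    """Ratio of undelivered packets to sent packets."""
    return (sent - delivered) / sent if sent else 0.0


def time_period(timestamps: List[float]) -> float:
    """Assumed: average time elapsed between two consecutive messages."""
    if len(timestamps) < 2:
        raise ValueError("need at least two messages")
    return mean(b - a for a, b in zip(timestamps, timestamps[1:]))


def message_frequency(timestamps: List[float]) -> float:
    """Messages per time unit: the inverse of the time period."""
    return 1.0 / time_period(timestamps)


def throughput(error_free_bits: int, window: float) -> float:
    """Error-free bits transmitted per time unit over an observation window."""
    return error_free_bits / window
```

With timestamps in seconds, messages at `0.0, 0.5, 1.0, 1.5` give a time period of `0.5` s and a message frequency of `2.0` messages per second, illustrating the inverse relationship stated in the table.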

A Domain-Specific Language for SLAs of Asynchronous Services

We also propose a domain-specific language (DSL), named AsyncSLA, to define SLAs for asynchronous services by tailoring the structure and key concepts of the WS-Agreement standard.

In addition to addressing the quality dimensions for asynchronous services outlined previously, we designed our DSL to support the inherent characteristics that distinguish asynchronous services from synchronous ones. These characteristics are:

- Interactions are initiated by the service producer: in synchronous services, the service client initiates the interaction (i.e., the client sends a request to the service provider to obtain a response). In asynchronous services, it is the service producer who initiates the interaction (i.e., the producer sends the information related to an event to the subscribed service consumers).

- One-way interaction with service consumers: Although the service consumer can acknowledge the reception of the information, there is no response that the service producer expects from its subscribed consumers.

- Channel-based communication: Instead of service methods or operations that are offered by synchronous services, asynchronous services are composed of channels, where the service producer publishes the information related to an event so that the service consumers subscribed to that channel can receive it.

- Broadcast communication: when an asynchronous service publishes a message, it is received by all the service consumers subscribed to the channel, leading to a 1–N relationship for a given event (i.e., one event has one service producer and N service consumers receiving it).

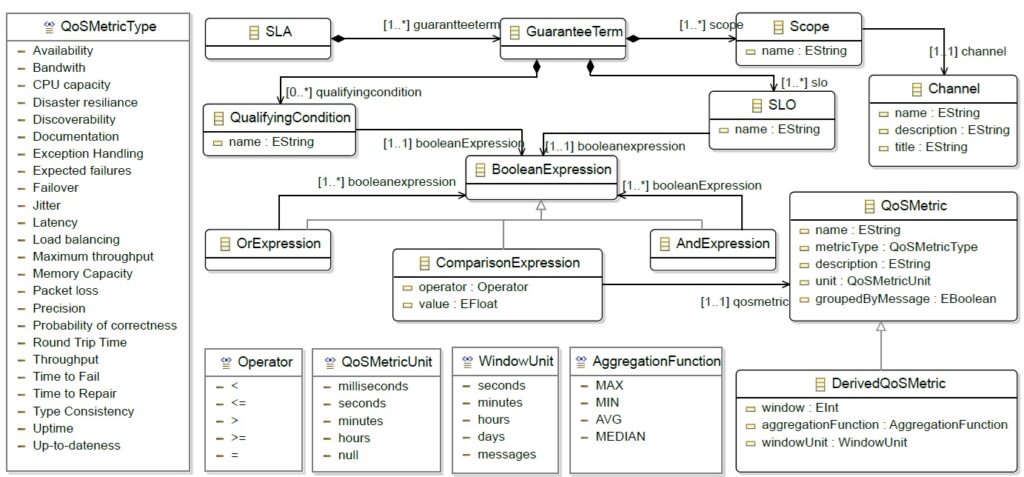

In our DSL, SLA is the top-level class for the definition of an SLA, and it is composed of several GuaranteeTerms. A GuaranteeTerm is a WS-Agreement element that defines the terms the service must meet regarding QoS. It includes a set of scopes, a set of qualifying conditions, and a set of service level objectives (SLOs). While these components are inherent to the WS-Agreement standard, we have tailored them to suit asynchronous services.

The Scope defines where the GuaranteeTerm applies. In our DSL, it includes an identifying name and refers to the service Channel used as the scope, which is akin to a service method in synchronous services. This granularity enables tailored QoS conditions for the different channels of an asynchronous service. For instance, a channel providing information about critical events may impose stricter QoS conditions than a less critical channel.

The QualifyingCondition defines the preconditions, expressed as a boolean expression (BooleanExpression), that must be met for the SLOs of the GuaranteeTerm to be enforced. In turn, the SLO defines the QoS condition that must be assessed when the QualifyingCondition is met. An SLO is specified as a boolean expression that must hold for the goal to be fulfilled.
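The following sketch shows how an SLA, its GuaranteeTerms, Scopes, QualifyingConditions and SLOs could fit together when evaluated against measured metric values. The class names follow the metamodel, but the snake_case fields, the `evaluate`/`violations` methods, and the metric keys are assumptions for illustration. As a simplification, conditions are plain Python predicates rather than the DSL's BooleanExpression trees.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Mapping, Optional

# Simplification: conditions are plain predicates over a dict of metric values.
Predicate = Callable[[Mapping[str, float]], bool]


@dataclass
class Scope:
    name: str
    channel: str  # name of the channel the guarantee applies to


@dataclass
class GuaranteeTerm:
    scopes: List[Scope]
    slos: List[Predicate]
    qualifying_conditions: List[Predicate] = field(default_factory=list)

    def evaluate(self, channel: str, metrics: Mapping[str, float]) -> Optional[bool]:
        """True/False if the term applies and its SLOs hold/fail; None if it does not apply."""
        if not any(scope.channel == channel for scope in self.scopes):
            return None  # the term is scoped to other channels
        if not all(cond(metrics) for cond in self.qualifying_conditions):
            return None  # a qualifying condition is not met, so the SLOs are not enforced
        return all(slo(metrics) for slo in self.slos)


@dataclass
class SLA:
    name: str
    guarantee_terms: List[GuaranteeTerm]

    def violations(self, channel: str, metrics: Mapping[str, float]) -> List[GuaranteeTerm]:
        # Only terms that apply and whose SLOs fail count as violations.
        return [t for t in self.guarantee_terms if t.evaluate(channel, metrics) is False]


# Usage (made-up values): on the alarms channel, while message frequency stays at
# or below 10 messages/s, latency must stay below 50 ms.
alarms = GuaranteeTerm(
    scopes=[Scope("critical-alarms", channel="plant/alarms")],
    slos=[lambda m: m["latency_ms"] < 50],
    qualifying_conditions=[lambda m: m["message_frequency"] <= 10],
)
sla = SLA("plant-sla", [alarms])
```

Returning `None` for "not applicable" keeps a failed qualifying condition distinct from a violated SLO, which matters when the results feed an SLA assessment.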

In our DSL, boolean expressions combine OrExpressions and AndExpressions in a tree structure whose leaves are one or more ComparisonExpressions. A ComparisonExpression is composed of a QoSMetric, an Operator, and a value. Based on our analysis of different SLAs, we opted for a lightweight yet sufficiently powerful grammar to define boolean expressions, rather than a more complex and cumbersome language such as OCL.
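A tree of And/Or nodes with comparison leaves can be evaluated recursively. This sketch mirrors the metamodel's class names, but the set of operator symbols, the field names, and the metric keys are assumptions; the DSL's actual Operator values may differ.

```python
from __future__ import annotations

import operator
from dataclasses import dataclass
from typing import List, Mapping, Union

# Hypothetical operator set; the DSL's actual Operator values may differ.
OPERATORS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
    "==": operator.eq,
    "!=": operator.ne,
}


@dataclass
class ComparisonExpression:
    metric: str   # name of the QoSMetric being compared
    op: str       # one of the OPERATORS keys
    value: float

    def evaluate(self, metrics: Mapping[str, float]) -> bool:
        return OPERATORS[self.op](metrics[self.metric], self.value)


@dataclass
class AndExpression:
    operands: List[BooleanExpression]

    def evaluate(self, metrics: Mapping[str, float]) -> bool:
        return all(e.evaluate(metrics) for e in self.operands)


@dataclass
class OrExpression:
    operands: List[BooleanExpression]

    def evaluate(self, metrics: Mapping[str, float]) -> bool:
        return any(e.evaluate(metrics) for e in self.operands)


# Inner nodes are And/Or expressions; leaves are comparisons.
BooleanExpression = Union[ComparisonExpression, AndExpression, OrExpression]

# latency_ms < 100 AND (availability >= 0.99 OR packet_loss <= 0.01)
slo = AndExpression([
    ComparisonExpression("latency_ms", "<", 100),
    OrExpression([
        ComparisonExpression("availability", ">=", 0.99),
        ComparisonExpression("packet_loss", "<=", 0.01),
    ]),
])
```

With `{"latency_ms": 80, "availability": 0.97, "packet_loss": 0.005}`, the availability comparison fails but the packet-loss one holds, so the Or node and the whole SLO evaluate to true. This shows how little machinery such a grammar needs compared with a general constraint language.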

QoSMetric defines the metric involved in the SLA/SLO evaluation. It has an identifying name, a description, a QoSMetricType, a QoSMetricUnit, and a groupedByMessage flag. The QoSMetricType attribute links the defined metric to a metric type from the quality model. In this way, the SLA can be integrated with any predefined quality model. The groupedByMessage flag determines whether the QoSMetric is calculated as the average across all clients subscribed to a specific event (when set to `true`), or whether a distinct value is calculated for each individual client (when set to `false`). For instance, if two clients are subscribed to the same channel and this attribute is set to `false`, two values (one per client) are produced; if it is set to `true`, a single value (the average of both clients' metrics) is generated. Finally, DerivedQoSMetric defines a derived QoS metric, i.e., the result of aggregating values (e.g., average, max, min) over an interval.
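The groupedByMessage semantics and a DerivedQoSMetric aggregation can be sketched as two small functions. The function names, the aggregation keys (`avg`, `max`, `min`), and the half-open interval `[start, end)` are assumptions made for illustration; the paper does not prescribe this interface.

```python
from statistics import mean
from typing import Callable, Dict, List, Mapping, Tuple


def measure(per_client: Mapping[str, float], grouped_by_message: bool) -> List[float]:
    """Values produced for one event, following the groupedByMessage semantics."""
    if grouped_by_message:
        # true: a single value, the average across all subscribed clients
        return [mean(per_client.values())]
    # false: one value per subscribed client
    return list(per_client.values())


# Hypothetical names for the aggregations a DerivedQoSMetric may apply.
AGGREGATIONS: Dict[str, Callable[[List[float]], float]] = {
    "avg": mean,
    "max": max,
    "min": min,
}


def derived_metric(samples: List[Tuple[float, float]], start: float, end: float,
                   aggregation: str) -> float:
    """Aggregate the (timestamp, value) samples that fall in [start, end)."""
    window = [value for timestamp, value in samples if start <= timestamp < end]
    if not window:
        raise ValueError("no samples in the interval")
    return AGGREGATIONS[aggregation](window)


# Usage: latencies (ms) measured for two clients subscribed to the same channel.
latencies = {"client-a": 40.0, "client-b": 60.0}
samples = [(0.0, 40.0), (1.0, 60.0), (2.0, 80.0), (5.0, 200.0)]
```

Here `measure(latencies, False)` yields two values, `[40.0, 60.0]`, while `measure(latencies, True)` yields a single averaged value, `[50.0]`, matching the two-client example above.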

A Tool to Specify SLAs for Asynchronous Services

To show the feasibility of our approach, we have implemented a tool prototype that supports the definition of SLAs for asynchronous services using our AsyncSLA proposal.

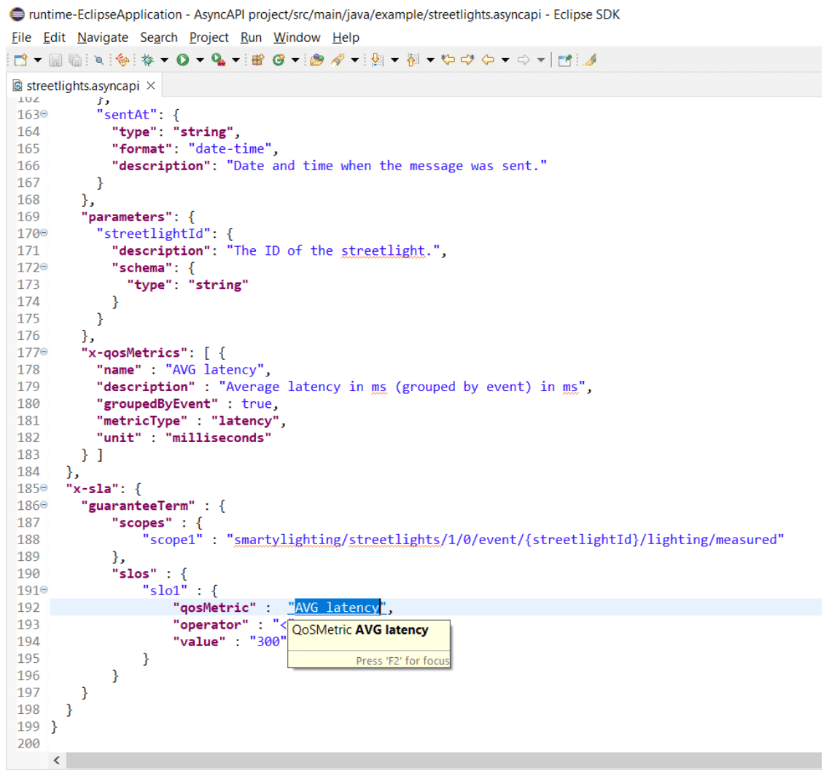

To implement this tool, we first defined a concrete syntax matching the metamodel described previously. In particular, the concrete syntax is defined as an extension of the AsyncAPI specification. AsyncAPI is an initiative that, similar to what OpenAPI represents for the REST world, aims to provide a single source of truth for the definition of asynchronous and message-based services. As part of our previous work, we developed the AsyncAPI Toolkit, an Eclipse-based tool that supports the development of AsyncAPI specifications and automatically generates code using model-driven engineering techniques. The AsyncAPI Toolkit defines both the concrete and abstract syntax of AsyncAPI specifications using Xtext.

We have now extended the AsyncAPI Toolkit to seamlessly integrate AsyncAPI and AsyncSLA specifications. This integration starts at the metamodel level, where we linked the AsyncAPI metamodel with the AsyncSLA metamodel presented previously. In the integration, we merged the common elements, such as Channel, and added the SLA object as a field of the root AsyncAPI object. We also defined a concrete syntax in JSON for the SLA-related elements, i.e., those that do not already belong to the AsyncAPI specification. To indicate that these additions do not conform to the official AsyncAPI specification, we prefix them with `x-`.
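As an illustration of the `x-` convention, the sketch below builds an AsyncAPI-like document with an SLA attached at the root and checks that every non-standard root key carries the `x-` prefix. The `x-sla` key and everything beneath it are invented for this example and do not reproduce the toolkit's actual concrete syntax. `ASYNCAPI_ROOT_FIELDS` is an illustrative subset of AsyncAPI 2.x root fields, not a complete list.

```python
import json

# Illustrative subset of the root-level fields defined by AsyncAPI 2.x.
ASYNCAPI_ROOT_FIELDS = {"asyncapi", "id", "info", "servers", "channels",
                        "components", "tags", "externalDocs"}


def non_conforming_keys(document: dict) -> list:
    """Root keys that are neither known AsyncAPI fields nor x- extensions."""
    return [key for key in document
            if key not in ASYNCAPI_ROOT_FIELDS and not key.startswith("x-")]


# Hypothetical document: "x-sla" and every key beneath it are invented for
# illustration and do not reproduce the toolkit's actual concrete syntax.
spec = {
    "asyncapi": "2.0.0",
    "info": {"title": "Plant sensors", "version": "1.0.0"},
    "channels": {"plant/alarms": {"subscribe": {"message": {"payload": {"type": "object"}}}}},
    "x-sla": {
        "name": "plant-sla",
        "guaranteeTerms": [{
            "scope": {"name": "critical-alarms", "channel": "plant/alarms"},
            "slo": {"metric": "latency", "operator": "<", "value": 50},
        }],
    },
}

print(json.dumps(spec, indent=2))
```

Because the SLA lives under an `x-` key, tools that only understand standard AsyncAPI can ignore it, while an SLA-aware tool can read it alongside the channel definitions it refers to.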

The extended AsyncAPI Toolkit provides a content-assisted editor and parser to define SLAs along with the AsyncAPI specification. Ready to give it a try?
