Generative artificial intelligence (AI) systems are capable of synthesizing complex content such as text, source code or images according to the instructions described in a natural language prompt. The quality of the output depends on crafting a suitable prompt. This has given rise to prompt engineering, the process of designing natural language prompts to best take advantage of the capabilities of generative AI systems.

Through experimentation, the creative and research communities have developed guidelines and strategies for writing good prompts. However, even for the same task, these best practices vary depending on the particular system receiving the prompt. Moreover, some systems offer additional features using a custom platform-specific syntax, e.g., assigning a degree of relevance to specific concepts within the prompt.

We propose applying model-driven engineering to support the prompt engineering process. Using a domain-specific language (DSL), we define platform-independent prompts that can later be adapted to provide good quality outputs in a target AI system. The DSL also facilitates managing prompts by providing mechanisms for prompt versioning and prompt chaining. Tool support is available thanks to a Langium-based Visual Studio Code plugin.

This work will be presented at the upcoming Models’23. Meanwhile, you can read the full paper or the extended summary below.

Challenges of Prompt Engineering

A key challenge of prompt engineering is that it is essentially a platform-specific process. Prompts that perform well in a particular system may under-perform in other systems or even in different versions of the same system. For instance, the release of version 2 of the Stable Diffusion open-source text-to-image system caused user complaints that prompts previously considered “good” were producing worse results. Two changes behind this performance gap were a different training dataset and the replacement of a component of the AI system (the encoder). Changes of this kind can be expected each time a new version is released.

Moreover, some advanced aspects of prompting such as negative information, assigning weights to prompt fragments or setting platform-specific flags have a different degree of support and use a different syntax in each generative AI system.
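To make these syntax differences concrete, here is a minimal Python sketch that renders the same weighted prompt with a negative concept for two targets. The `Fragment` class and both render functions are hypothetical names, not part of Impromptu. The output formats are assumptions modelled on the `(text:1.5)` emphasis syntax of the AUTOMATIC1111 Stable Diffusion web UI and on Midjourney's `text::1.5` weights and `--no` parameter, and should be checked against each platform's current documentation.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    """A piece of a prompt (hypothetical structure, for illustration only)."""
    text: str
    weight: float = 1.0      # relative relevance of this fragment
    negative: bool = False   # True if the concept should be avoided


def render_sd_webui(fragments):
    """Assumed web UI style: returns a (prompt, negative_prompt) pair."""
    def fmt(f):
        # Only non-default weights need the "(text:weight)" wrapper
        return f.text if f.weight == 1.0 else f"({f.text}:{f.weight:g})"

    positive = ", ".join(fmt(f) for f in fragments if not f.negative)
    negative = ", ".join(fmt(f) for f in fragments if f.negative)
    return positive, negative


def render_midjourney(fragments):
    """Assumed Midjourney style: 'text::weight' parts plus a '--no' parameter."""
    parts = [f"{f.text}::{f.weight:g}" for f in fragments if not f.negative]
    avoided = [f.text for f in fragments if f.negative]
    prompt = " ".join(parts)
    if avoided:
        prompt += " --no " + ", ".join(avoided)
    return prompt


fragments = [
    Fragment("a castle on a hill"),
    Fragment("thick fog", weight=1.5),
    Fragment("people", negative=True),
]
print(render_sd_webui(fragments))
print(render_midjourney(fragments))
```

The same three fragments yield two different strings, which is exactly the kind of adaptation a platform-independent prompt definition would delegate to a generator.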

Benefits of Model-Driven Engineering to Prompt Engineering

To address these challenges, we propose to apply model-driven engineering (MDE) principles and techniques to prompt engineering. Our goals are:

- Facilitating the definition of good prompts for different generative AI systems;

- Facilitating the migration of a prompt from one platform to another without losing effectiveness; and

- Enabling knowledge management of prompt-related tasks (such as documentation, traceability or versioning). In particular, we offer the following contributions:

Beyond these core benefits, we believe MDE can also bring additional benefits such as:

- Platform selection: When prompts are specified in a platform-independent way, it is possible to implement strategies for selecting the most suitable generative AI system for a given prompt automatically. The criteria used in this decision may include:

- Cost of the AI service.

- Length of the prompt (for services with a prompt limit).

- Support for constructs used in the prompt (e.g., Midjourney does not currently support combination prompts).

- Performance information of different generative AI systems for a particular type of task.

- Available interfaces of the AI service (e.g., API or graphical user interface).

- Code generation and run-time management: In addition to generating textual prompts, it is possible to generate the code that invokes the generative AI system to capture the response. It is also possible to keep a log of the outputs of generative AI systems, recording the prompts, parameters and outputs. This can be used to keep track of the quality of a given prompt over time.

- Prompt optimization: It would be possible to integrate prompt optimization strategies into the prompt generation process.

- Platform comparison: Different generative AI systems may produce outputs of different quality for the same prompt. It may be interesting to generate and process prompts in different platforms.

- Prompt quality and security analysis: In addition to generating code, it would be possible to generate validation artifacts, such as prompt-driven tests, to automatically assess whether the output of a generative AI tool for a given prompt fulfills the input specification. For prompts with parameters, an area of particular interest is checking whether the prompt is susceptible to prompt injection.

- Multi-lingual prompt generation: We can generate the platform-specific prompts in languages other than English. This will become important as we see a trend towards releasing localized generative AI systems trained on other languages.
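As an illustration of the platform selection idea, the following Python sketch filters candidate services by some of the criteria listed above (prompt length limit, supported constructs, available interface) and then picks the cheapest one. Everything here is hypothetical: the `Platform` fields, the flat per-call cost and the construct names are assumptions made for the example, not data about real services.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:
    """Hypothetical description of a generative AI service."""
    name: str
    cost_per_call: float             # assumed flat cost, arbitrary units
    max_prompt_chars: int            # prompt length limit of the service
    constructs: set = field(default_factory=set)  # supported prompt features
    has_api: bool = True


def select_platform(platforms, prompt_text, needed_constructs, require_api=True):
    """Return the cheapest platform able to handle the prompt, or None."""
    candidates = [
        p for p in platforms
        if len(prompt_text) <= p.max_prompt_chars
        and needed_constructs <= p.constructs       # subset check
        and (p.has_api or not require_api)
    ]
    return min(candidates, key=lambda p: p.cost_per_call, default=None)


platforms = [
    Platform("service-a", 0.02, 400, {"weights", "negative"}),
    Platform("service-b", 0.01, 400, {"weights"}),
    Platform("service-c", 0.005, 50, {"weights", "negative"}),
]
choice = select_platform(platforms, "a castle on a hill in thick fog", {"negative"})
print(choice.name if choice else "no suitable platform")
```

A real strategy would probably also weigh task-specific performance information, which this sketch leaves out.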

Why a DSL for prompt engineering?

The key element of all the above benefits is Impromptu, a domain-specific language (DSL) for defining prompts in a platform-independent way. The DSL supports modular prompt definition and can describe different tasks (such as text-to-text or text-to-image) as well as multi-modal inputs. But, you may wonder:

Why is it necessary to use a Domain-Specific Language for Prompt Engineering when you could just write it with natural language? Several reasons motivate this decision:

- Prompt templates: For the sake of generality, it may be desirable to define a prompt as a template, where fragments of the prompt are parameters to be instantiated in each particular call with a different piece of information or command.

- Hyperparameters: The call to a generative AI system requires setting suitable values for hyperparameters. It is useful to record information about suitable values for these hyperparameters together with the prompt.

- Multi-modal inputs: Recent generative AI systems support additional inputs beyond textual prompts, such as images. It is necessary to describe these additional inputs and their role in the definition of the task.

- Modularity: A company may desire that several prompts reuse a set of instructions to specify the tone, mood or target audience of the output, specify fallback actions or specify certain limitations on the output. Rather than manually replicating and appending these snippets each time, it would be desirable to define these snippets independently and import them where it is necessary.

- Chaining: Complex tasks may not be solvable by a single prompt. In this scenario, it is necessary to indicate the relationship between different prompts in a chain, e.g., when a given prompt uses the result from a previous one.

- Documentation and meta-data: A complex prompt is the result of careful design and extensive experimentation. Nevertheless, this knowledge is lost when we view a prompt simply as a string of text. It is necessary to keep track of relevant meta-data, such as its author, versions, and comments.

- Cost-effectiveness: Impromptu is targeted towards complex prompts that aim to be re-used. We are considering the prompts that empower an intelligent application or service relying on a generative AI system as its back-end. These are the types of prompts where it makes sense to optimize the effectiveness, minimize cost and enable platform-independence.

- Flexibility: Natural language is capable of describing any type of task or scenario. In contrast, a DSL introduces a structure that may limit the freedom provided by natural language. However, a DSL is better suited to expressing concepts and adapting them to different platforms.
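Several of these needs (templates, hyperparameters, meta-data and chaining) can be pictured with a small Python sketch. It is not how Impromptu is implemented: `PromptRecord`, `run_chain` and the `$previous` placeholder convention are hypothetical, and `generate` stands for whatever caller-supplied function actually invokes a generative AI system.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptRecord:
    """A prompt template stored together with hyperparameters and meta-data."""
    name: str
    template: str                         # uses $param placeholders
    hyperparameters: dict = field(default_factory=dict)
    author: str = ""
    version: str = "1.0"

    def instantiate(self, **params):
        # Template.substitute raises KeyError if a placeholder has no value
        return Template(self.template).substitute(**params)


def run_chain(records, generate, **params):
    """Run prompts in order; each step may read the prior output as $previous."""
    previous = ""
    for record in records:
        text = record.instantiate(previous=previous, **params)
        previous = generate(text, **record.hyperparameters)
    return previous


def fake_generate(text, **hyperparameters):
    """Stand-in for a real model call, so the sketch runs offline."""
    return f"<{text}>"


topic = PromptRecord("topic", "Suggest a topic for a $level English exam.",
                     hyperparameters={"temperature": 0.2}, author="jane")
question = PromptRecord("question", "Write a question about: $previous")
print(run_chain([topic, question], fake_generate, level="B2"))
```

Keeping the hyperparameters and the author next to the template is what a plain string of text cannot do, and it is the part a DSL makes explicit.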

Impromptu – Our Prompt DSL

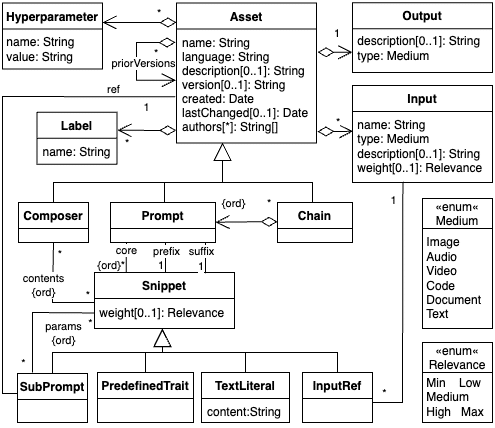

The following figures show the metamodel for the prompt DSL, which can describe all core prompt engineering concepts. The key ones are:

- Assets: The central notion, which can be either a prompt, a composer or a prompt chain. Assets can be annotated with useful information such as language, description and authors. To keep track of different versions of an asset, it is possible to provide a version name, date of creation and last update and identify prior versions. Each asset has a single output that can be of a different type, e.g., text or image. Moreover, assets may have several inputs.

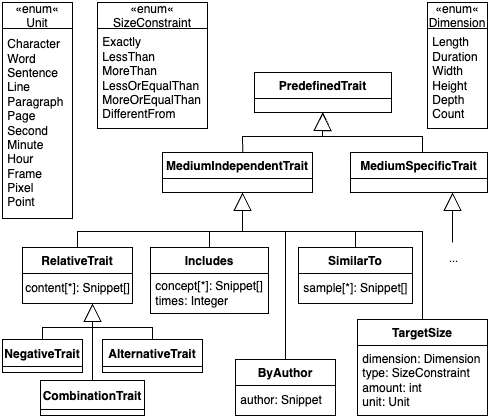

- Prompts: Prompts are defined as a sequence of snippets. One snippet is identified as the prefix (suffix) and is prepended (appended) to the rest of the prompt, while the rest form the core of the prompt. A snippet can also include a property about the output (called trait) chosen from a predefined catalog. Figure 2 presents the traits that are independent of the format of the prompt’s output. Some sample traits are including certain elements, emulating the style of a given author or satisfying specific size constraints. There are also format-dependent traits. Each component of a prompt, such as snippets or inputs, can be given a weight that indicates its relevance.

- Composers: A composer is a utility that concatenates several snippets without performing any additional processing. This is useful to collate the output of different text-to-text prompts into a single string.

- Prompt chains: A sequence of prompts that computes a result step by step. Each step corresponds to the execution of an asset.
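The concepts above can be approximated with a few Python dataclasses. This is only a reading aid under stated assumptions: the class and field names are hypothetical and simplified with respect to the actual metamodel, inputs and traits are omitted, and the plain-text `render` method (joining snippets with spaces and ignoring weights) is an invented placeholder for the platform-specific generation step.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Asset:
    """Common annotations shared by prompts, composers and chains."""
    name: str
    description: str = ""
    authors: List[str] = field(default_factory=list)
    version: str = ""
    output: str = "text"               # e.g. "text" or "image"


@dataclass
class Snippet:
    text: str
    weight: Optional[str] = None       # e.g. "high"; rendering is platform-specific


@dataclass
class Prompt(Asset):
    core: List[Snippet] = field(default_factory=list)
    prefix: Optional[Snippet] = None
    suffix: Optional[Snippet] = None

    def render(self) -> str:
        # Assumed plain-text rendering: prefix, then core, then suffix
        ordered = [self.prefix, *self.core, self.suffix]
        return " ".join(s.text for s in ordered if s is not None)


@dataclass
class Composer(Asset):
    parts: List[str] = field(default_factory=list)

    def render(self) -> str:
        # Concatenation only, no additional processing
        return "".join(self.parts)


@dataclass
class PromptChain(Asset):
    steps: List[Asset] = field(default_factory=list)   # executed in order


prompt = Prompt(
    name="Demo.Questions",
    core=[Snippet("Propose 5 questions."),
          Snippet("Use British English.", weight="high")],
    suffix=Snippet("Keep them short."),
)
print(prompt.render())
```

A platform-specific generator would replace `render` and decide how a weight such as `high` is expressed in the target system.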

We have also defined a (textual) concrete syntax for Impromptu. The complete grammar is available in our GitHub repository. But just to give you a taste of it, we show how to define a prompt to be reused as a suffix for several prompts. This prompt provides instructions regarding the tone and difficulty of the questions for student exams. It includes a single parameter: the level of competency in English of the questions.

    composer Exam.DetailedInstructions (@level "English level")
        "Questions should be clear and avoid controversial topics such as politics or religion.",
        "The difficulty should be adequate for students with an English level of ",
        @level,
        "."

Next, we write a prompt to generate the grammar questions:

    prompt Exam.GrammarQuestions (@level "English level"): text
        core =
            "Propose 5 grammar questions for an English exam",
            "Use British English at all times" weight high
        suffix = Exam.DetailedInstructions(@level)

Again, refer to the paper or the repo (both linked above) for more complex examples showing all the power and expressiveness of the DSL.

Implementation

As a prototype, we have implemented an editor for the Impromptu DSL as a Visual Studio Code extension. The implementation is written in TypeScript and is based on Langium, an open-source language engineering tool which supports the Language Server Protocol. Thanks to Langium, the editor offers built-in syntax-highlighting, syntax checking and autocomplete suggestions. These suggestions are a useful way to help a user create a prompt, e.g., by suggesting a list of candidate predefined traits.

In addition to a graphical user interface (GUI), Impromptu also offers a simple command-line interface (CLI) that makes it possible to generate tool-specific prompts within automated workflows.

What’s next?

This is just one of our steps towards the challenge of modeling smart software systems (e.g., see also how we plan to test the ethical aspects of these types of systems).

On our side, we will start extending and adapting the DSL and associated toolset to make all the MDE promises for Prompt Engineering a reality. And you? What is your prompt DSL wishlist?

Robert Clarisó is an associate professor at the Open University of Catalonia (UOC). His research interests consist in the use of formal methods in software engineering and the development of tools and environments for e-learning.
