No programmer wants to spend hours aligning HTML elements or fiddling with complex CSS. This is why any low-code tool will generate the user interface code of your application for you. The problem is that they generate rather basic interfaces, mostly oriented to typical data-entry forms and grids. Anything more complex than that and you are back to tuning the CSS by hand.

At the opposite end of the spectrum, we have a variety of visual mockup/wireframe tools to quickly build a prototype of your desired graphical interface. But those don’t include any kind of code-generation capabilities. Therefore, you enter a vicious cycle where “the designer prepares an app design, hands it off to the developer who manually codes each screen. This is where the back and forth starts (colors, responsive layouts, buttons, animations…). In some cases, this process can take weeks or months” (taken from here).

To fix this situation, a few design-to-code tools started to appear. For instance, Supernova takes Sketch designs and transforms them into ready-to-use native UI code. Yotako also aims to help you in a smooth transition from design to code, being able to import a variety of design formats. Anima, with its 10M USD in funding, is for sure one of the big players in this area.

But, as in many other fields, the arrival of the new AI era has boosted the number and functionality of these tools. Their promise is that screenshots and even handmade sketches can serve as the input of the UI code generation process. Thanks to deep learning technologies that recognize the interface elements in the images, they’re able to grasp the desired UI and generate code that mimics that design (either by going from the rough sketch to a “formal” wireframe or by directly generating the code). Let’s see some of these tools and approaches.

I first covered these tools a couple of years ago. What seemed to be the start of an explosion of AI-based design and generation tools never really happened. If anything, the field has stalled. I am not sure whether this is because many of those tools realized there is a huge gap between a cool AI demo and a real AI product. Technically speaking, there are more and more types of AI models that could be useful in this context (e.g. DALL·E could help in creating some design elements from textual descriptions), but getting to an actual usable tool (and a financially viable one) is an entirely different discussion.

A couple of the initial tools in the list have died or refocused on the design part (more or less dropping the AI emphasis). And while we have been able to include a few more tools, they are all at early stages, so we are still waiting to see whether any of them will revolutionize the development of user interfaces in the near future. Let’s see our selection so far.

Teleporthq

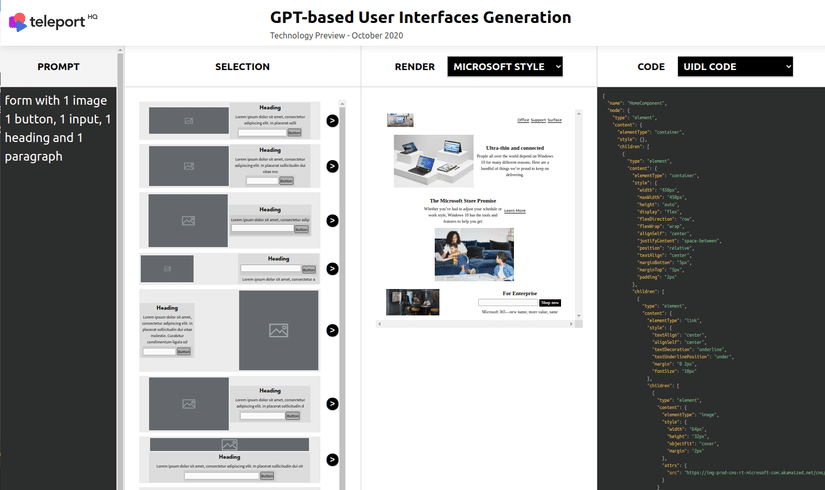

Teleport is a code generator for front-ends. You can visually create your design with drag-and-drop components and then automatically generate the corresponding HTML, CSS and JS code from it.

As described in this post, they are experimenting with a GPT-based user interface generator, able to create designs from textual descriptions, among other innovations. But these innovations are not yet part of the main tool.

Uizard

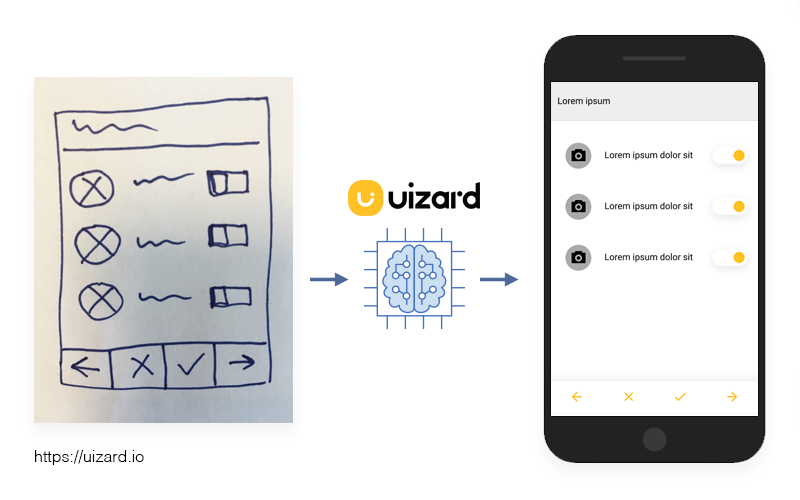

Uizard’s vision is to improve the designer/developer workflow that too often results in more frustration than innovation. They raised 2.8M in seed funding to quickly turn app sketches into prototypes. Even if there are plenty of tools to specify the mockups of a new product, more often than not, any meeting ends up with a few quick hand-sketches. Their goal is to turn them into prototypes in seconds. A public version of their Sketch2code solution, pix2code, used to be available, but it doesn’t seem to be maintained anymore.

Despite this initial goal, they currently seem to target designers more and market themselves as a “no design platform” (kind of what “no-code” is to developers) by, for instance, automatically turning sketches into beautiful designs.

They keep doing interesting research on the application of AI/NLP/DL to design, though this part still seems to be more on the experimental side and not so much a core component of the product.

AirBnB Sketching Interfaces

This experiment from AirBnB aimed mainly at detecting visual components on rough sketches. Their point was that “if machine learning algorithms can classify a complex set of thousands of handwritten symbols with a high degree of accuracy, then we should be able to classify the 150 components within our system and teach a machine to recognize them”. So, as you can see in the video below, they trained the system with the set of components they typically use in AirBnB interfaces and managed to get a high degree of recognition.

Unfortunately, it looks like this was mainly an exploratory experiment. Even the main person behind it has moved to another company, so I’m not sure what the future plans for it are.

Screenshot to Code

This open-source project reuses some of the ML work above (especially pix2code) to create an excellent tutorial you can use to learn (and play with) all the ML concepts and models you need to get started in this mockup-to-UI field.

Microsoft’s Sketch2Code

Sketch2Code is a Microsoft approach that uses AI to transform a handwritten user interface design from a picture to valid HTML markup code. Sketch2code involves three main “comprehension” phases.

- A custom vision model predicts what HTML elements are present in the image and their location.

- A handwritten text recognition service reads the text inside the predicted elements.

- A layout algorithm uses the spatial information from all the bounding boxes of the predicted elements to generate a grid structure that accommodates all.

The result, as you can see, is quite impressive.
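To make the third phase more concrete, here is a minimal Java sketch of what a layout step could look like. It is not Microsoft’s algorithm: the `LayoutSketch` class, the `Box` type and the row-grouping heuristic are all assumptions made up for illustration. It takes boxes as they might come out of the detection and text recognition phases, groups vertically overlapping boxes into rows, orders each row from left to right, and emits one `div` per row.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Illustrative only: NOT the actual Sketch2Code layout algorithm. */
public class LayoutSketch {

    /** Hypothetical output of the detection and text recognition phases. */
    public static class Box {
        public final String tag;   // predicted HTML element, e.g. "button"
        public final String text;  // recognized handwritten text
        public final int x, y, width, height;

        public Box(String tag, String text, int x, int y, int width, int height) {
            this.tag = tag;
            this.text = text;
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    /** Groups boxes into rows: a box starts a new row when it begins below the current row's bottom edge. */
    public static List<List<Box>> groupIntoRows(List<Box> boxes) {
        List<Box> sorted = new ArrayList<>(boxes);
        sorted.sort(Comparator.comparingInt(b -> b.y));

        List<List<Box>> rows = new ArrayList<>();
        int rowBottom = Integer.MIN_VALUE;
        for (Box box : sorted) {
            if (rows.isEmpty() || box.y >= rowBottom) {
                rows.add(new ArrayList<>());
                rowBottom = box.y + box.height;
            } else {
                rowBottom = Math.max(rowBottom, box.y + box.height);
            }
            rows.get(rows.size() - 1).add(box);
        }
        // Within each row, order the elements from left to right.
        for (List<Box> row : rows) {
            row.sort(Comparator.comparingInt(b -> b.x));
        }
        return rows;
    }

    /** Emits one div per row; the text is not HTML-escaped in this sketch. */
    public static String toHtml(List<Box> boxes) {
        StringBuilder html = new StringBuilder();
        for (List<Box> row : groupIntoRows(boxes)) {
            html.append("<div class=\"row\">");
            for (Box box : row) {
                html.append("<").append(box.tag).append(">")
                    .append(box.text)
                    .append("</").append(box.tag).append(">");
            }
            html.append("</div>\n");
        }
        return html.toString();
    }

    public static void main(String[] args) {
        List<Box> detected = new ArrayList<>();
        detected.add(new Box("button", "Send", 220, 105, 80, 30));
        detected.add(new Box("h1", "Contact", 10, 10, 200, 40));
        detected.add(new Box("label", "Email", 10, 100, 100, 30));
        System.out.print(toHtml(detected));
    }
}
```

With these three made-up boxes, the heading ends up alone in the first row, while the label and the button share the second one because their vertical ranges overlap. A real tool would also need to handle nesting, column widths and noisy detections, which this sketch ignores.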

Ink to code was an earlier and much more experimental attempt.

Zecoda

Zecoda is still more of a tool announcement than a real tool. But I’m adding it here because I like how they acknowledge the limitations of AI and explain that, even if an AI service will be in charge of generating the code, such code will be reviewed by a human developer.

Viz2<code>

Viz2<code> was in beta. It looked very promising but it seems dead now. You could use a “standard” wireframe design or handmade sketches as input and export them to Sketch files or a ready-to-launch website. In the latter case, they also promised to generate some useful code to help you integrate that front-end with your data and backend.

They used deep neural networks to recognize and characterize components when scanning the design. Then they tried to infer the role and expected behavior of each recognized component to generate a more “semantic” code.

Viz2<code> is the tool featured in the top image illustrating this post.

Generating interface models and not just code

I would like to see some of these tools generating not the actual code but the models of that UI, so that it would be possible to integrate those models with the rest of the software models within a low-code tool. This way, we could easily link and integrate the UI components with the rest of the system data and functionality and generate a complete implementation at once.

FNR Pearl Chair. Head of the Software Engineering RDI Unit at LIST. Affiliate Professor at University of Luxembourg.

For prototypes or, to use the more popular term, for MVPs, it is even possible to generate the UI out of the data model. We did it back in 2003. We got the customer to specify the data to be presented/edited as XML Schema Definitions (XSDs). We then enriched the XSD with additional information like read-only/read-write, etc. and applied an XSL Transformation (XSLT) to generate Java (Swing) code out of it. It worked really well and paid off, since we had to do 300+ forms 🙂

BTW, after refining the XSLT, the generated forms went into production. Even better, the customer really loved them because of their uniformity. All forms had the same look&feel.

/Carsten

I agree. Most forms are trivial CRUDs, so they could be automatically generated. In fact, this was the focus of my old and completely failed attempt to build a generator: https://modeling-languages.com/umltosql-umltosymfonyphp-and-umltodjangopython-are-now-open-source/

Hi Jorge

I finally found the slide set I was looking for, here it is

http://www.disca.upv.es/jorge/ae2010/slides/IP1-2_Wedin_MDA_and_SPARK.pdf

I has been posted by yourself 😉

The last figure I was told by Saab Bofors Dynamics AB engineers is that Saab Bofors Dynamics AB generates 97.8% of its source code out of models.

It’s the mindset that limits.

/Carsten

s/I has been posted by yourself/I guess the slide set has been posted by yourself/

Interesting stat (but no, I didn’t post those slides)

Interesting work. But while

> 20% of the modeling effort suffices to generate 80% of the application code

is in principle perfectly correct, one also has to face the fact that

complexity is system inherent

That means the complexity remains the same whatever syntax you use.

Another aspect is mindset or, to be more specific, the syntax favored by developers. Developers are no modelers. Developers prefer to work with a text-based syntax. That is why an annotation like

```java
@Min(value = 18, message = "Age should not be less than 18")
@Max(value = 150, message = "Age should not be greater than 150")
private int age;
```

is so extremely popular among developers. The abstraction level is as high as with UML models created to generate source code. The transformation mechanisms used are also the same. The Java annotation processing tool (apt) performs pre-compile-time transformations, just as an ATL- or MOFM2T-based transformation does. So your research work was not in vain.

As a result, transformation has become extremely popular 😉

/Carsten