This week I attended a PhD thesis defense where the candidate had made intensive use of ATL to write non-trivial model transformations. So, when it was my turn to make the candidate sweat a little, one of the questions I asked him was: “Based on this experience, what changes to ATL (either the language or the IDE) would you propose?”

His answer was “None”. I’m really glad ATL worked so well for him and his particular application scenario, but I’m sure this is not the case for all ATL users. So, I’d like to ask you here: if you had the chance, what would you change about the way ATL works, its syntax, or its tools? And if you want to be more generic, you can also give your opinion on the problems you think model transformation languages suffer from (but please do not turn this into a language war; that is not the point).

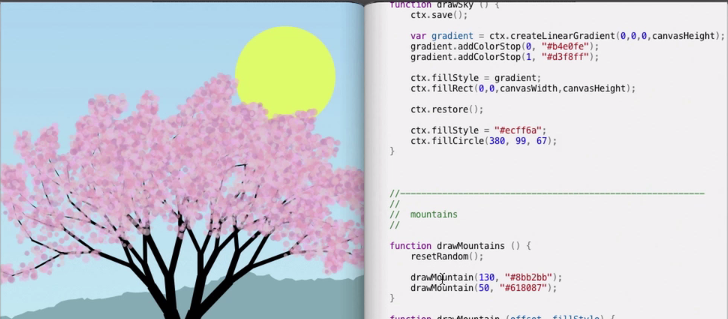

I’ll start with one example myself. I’d like to have a live coding environment for ATL where users could immediately see the effect of their changes on the transformation as they make them. Imagine adding a new rule and immediately getting a representation of how this rule changes the behaviour of the transformation.

When will we get a live coding environment for model transformations? Not sure what I mean? Bret Victor explains it much better: read about it or, better, just watch it below.

Bret Victor – Inventing on Principle from CUSEC on Vimeo.
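A very rough approximation of that feedback loop is to re-execute the transformation every time its source changes. The Python sketch below does exactly that by polling the file's modification time; `LiveRunner` and the `run` callback (which would execute the ATL transformation and render the resulting model) are hypothetical names, not part of any ATL tooling. A real live coding IDE would instead react to editor change events and show what changed in the output, rather than blindly re-running everything.

```python
import os
from typing import Callable, Optional


class LiveRunner:
    """Re-runs `run` whenever the watched transformation file changes on disk.

    Polling sketch only: `run` is a hypothetical callback that receives the
    transformation source and returns whatever should be shown to the user.
    """

    def __init__(self, path: str, run: Callable[[str], object]):
        self.path = path
        self.run = run
        self._last_mtime: Optional[int] = None  # last seen modification time (ns)
        self.last_result = None                 # output of the most recent run

    def poll(self) -> bool:
        """Return True if the file changed since the last poll and `run` was invoked."""
        mtime = os.stat(self.path).st_mtime_ns
        if mtime == self._last_mtime:
            return False
        self._last_mtime = mtime
        with open(self.path, encoding="utf-8") as f:
            self.last_result = self.run(f.read())
        return True


# Hypothetical usage: execute_transformation would run ATL and render the output model.
# runner = LiveRunner("MyTransformation.atl", execute_transformation)
# while True:
#     runner.poll()
#     time.sleep(0.2)
```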

Now, your turn!

FNR Pearl Chair. Head of the Software Engineering RDI Unit at LIST. Affiliate Professor at University of Luxembourg.

Hi there! Well, after thinking about your question, I have one proposal for improvement :). I remember that generating the .asm file took quite long, especially with UML4TST2BZP, which contains a lot of helpers to concatenate strings and lots of rules that write strings (yes, OK, I used ATL as a kind of code generator for this transformation :P), but as I explained to you, it was for “pre-treatments”. I mean, I found some features of the ATL language easier to use than Acceleo, especially when the target metamodel (BZP) is supposed to be like an abstract syntax tree but is not… (and it was even easier to generate OCL4MBT code from its metamodel, which was a kind of abstract syntax tree: with helpers, everything is just magic!!). In the case of UML4TST2BZP, there were a lot of strings to compute and a lot of calls to this awesome function: thisModule.resolveTemp(var1, var2). In addition, every rule generates at least 8 to 10 elements of the target MM (see the example below). Anyway, I noticed that it took something like 30 to 40 seconds to generate the .asm file. Well, maybe my computer is a little tired too :).

Example of a rule:

```
rule SimpleStateWithExternalGuardedOrEventTransitiont2BZP {
    from
        uml4tst : MMuml4tst!Vertex (
            […]
        )
    to
        bzp_context : MMbzp!Context (),
        bzp_declaration_status : MMbzp!Declaration (),
        bzp_predicat_status_invariant : MMbzp!Predicat (),
        bzp_predicat_status_initialisation : MMbzp!Predicat (),
        bzp_operation_entry : MMbzp!Operation (),
        bzp_predicat_post_entry : MMbzp!Predicat (),
        bzp_operation_exit : MMbzp!Operation (),
        bzp_predicat_pre_exit : MMbzp!Predicat (),
        bzp_predicat_post_exit : MMbzp!Predicat (),
        bzp_event : MMbzp!Event (),
        bzp_predicat_ficticious_1 : MMbzp!Predicat (),
        bzp_predicat_ficticious_2 : MMbzp!Predicat (),
        bzp_set : MMbzp!Sett (),
        bzp_pair : MMbzp!Pair (),
        bzp_atom : MMbzp!Atom ()
    do {
        -- Give each generated element a unique id of the form 'id_<n>'.
        -- Two module-level counters are used: thisModule.id_decl and thisModule.id.
        thisModule.id_decl <- thisModule.id_decl + 1;
        bzp_declaration_status.id <- 'id_' + thisModule.id_decl.toString();
        thisModule.id_decl <- thisModule.id_decl + 1;
        bzp_predicat_status_invariant.id <- 'id_' + thisModule.id_decl.toString();
        thisModule.id_decl <- thisModule.id_decl + 1;
        bzp_predicat_status_initialisation.id <- 'id_' + thisModule.id_decl.toString();
        thisModule.id_decl <- thisModule.id_decl + 1;
        bzp_operation_entry.id <- 'id_' + thisModule.id_decl.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_predicat_post_entry.id <- 'id_' + thisModule.id.toString();
        thisModule.id_decl <- thisModule.id_decl + 1;
        bzp_operation_exit.id <- 'id_' + thisModule.id_decl.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_predicat_pre_exit.id <- 'id_' + thisModule.id.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_predicat_post_exit.id <- 'id_' + thisModule.id.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_event.id <- 'id_' + thisModule.id.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_predicat_ficticious_1.id <- 'id_' + thisModule.id.toString();
        thisModule.id <- thisModule.id + 1;
        bzp_predicat_ficticious_2.id <- 'id_' + thisModule.id.toString();
    }
}
```

See you! 🙂

I’ve done some work on this; see this message on the m2m-atl-dev mailing list:

https://dev.eclipse.org/mhonarc/lists/m2m-atl-dev/msg00236.html

Another comment about what I noticed during my experience. Maybe it’s because of the following that I answered “None” (without much hindsight at the time) about potential issues with ATL. My observation is this: when I wondered “How do I write these rules? It’s so tricky and complicated”, or “Grrr! Why is ATL write-only on the target MM??”, the issue was often actually a metamodel issue (input or output) and rarely an ATL one… In other words, coding with ATL made me think about the metamodels and their design. That’s what I observed most of the time. Sometimes, I had to generate collections of classes from a single input element using lazy rules or called rules (instead of the distinct…foreach statements described in the “Iterative target pattern element” section of the ATL user guide). I found this “tricky” to code at times, but entirely feasible. Finally, the idea of live coding with ATL could be very interesting, and I can already imagine my models changing in real time while I code the rules! :).

Just a couple of tricks here, but they have proven very useful (to me).

An easy feature to implement, but a very useful one, is the ability to see the execution call stack for chosen elements in the input model. I implemented this for QVTo during my PhD. As I was working on not-so-tiny models, it really helped me with coding and debugging transformations. As a complement, getting the line from which the mapping/rule was called for an element during execution could be handy. Moreover, visualizing this directly on the transformation code, with colors and labels showing the call number, could be great (though that is more appropriate for debugging activities).
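To illustrate the idea (not the QVTo implementation mentioned above), here is a minimal Python sketch of a per-element call-stack recorder: every rule is wrapped so that, whenever it processes an input element, the chain of rules currently executing is stored under that element's identifier. `RuleTracer`, the dictionary-based elements and their `"id"` key are all hypothetical stand-ins for a real engine's rule dispatch and model elements.

```python
from collections import defaultdict
from functools import wraps


class RuleTracer:
    """Records, for each input element, every rule call stack that touched it."""

    def __init__(self):
        self._active: list[str] = []     # names of rules currently executing
        self.traces = defaultdict(list)  # element id -> list of call stacks

    def rule(self, func):
        """Decorator: record the active rule chain each time `func` handles an element."""
        @wraps(func)
        def wrapper(element, *args, **kwargs):
            self._active.append(func.__name__)
            try:
                # Snapshot the current call chain for this element (hypothetical "id" key).
                self.traces[element["id"]].append(tuple(self._active))
                return func(element, *args, **kwargs)
            finally:
                self._active.pop()
        return wrapper

    def stacks_for(self, element_id):
        """Return the recorded call stacks for one input element (empty if untouched)."""
        return list(self.traces.get(element_id, []))


tracer = RuleTracer()


@tracer.rule
def state2event(state):
    return state["name"] + "_event"


@tracer.rule
def state2context(state):
    # This rule delegates part of its work to another rule on the same element.
    return {"context": state["name"], "event": state2event(state)}


state2context({"id": "s1", "name": "Idle"})
print(tracer.stacks_for("s1"))  # [('state2context',), ('state2context', 'state2event')]
```

An IDE could then map each recorded rule name back to its source line to get the colored, numbered annotations described above.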

A quick OCL type evaluator for complex expressions is also very convenient. We implemented this for MOFM2T transformations, and it helps a lot to quickly understand expressions at a coarser grain (as does a ‘preview’ button, which is also very helpful to quickly check the impact of your modifications).
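As a rough idea of what such an evaluator computes, the Python sketch below infers the static type of a navigation chain such as `aClass.attributes.type.name` against a small, hypothetical metamodel. It applies a deliberately simplified version of OCL's rules: once any step is many-valued, the result stays a collection (implicit collect), and every collection is reported as `Sequence(T)`, ignoring the Set/Bag/OrderedSet distinctions a real OCL type checker would make. The metamodel and function name are illustrative assumptions, not the MOFM2T tooling mentioned above.

```python
# Hypothetical metamodel: class name -> feature name -> (feature type, is_many)
METAMODEL = {
    "Class": {"name": ("String", False), "attributes": ("Attribute", True)},
    "Attribute": {"name": ("String", False), "type": ("Class", False)},
}


def navigation_type(start: str, path: list[str]) -> str:
    """Infer the static type of `start.f1.f2...` using simplified OCL navigation rules."""
    current, many = start, False
    for feature in path:
        features = METAMODEL.get(current)
        if features is None or feature not in features:
            raise KeyError(f"{current} has no feature '{feature}'")
        current, step_many = features[feature]
        # A collection stays a collection once any step is many-valued.
        many = many or step_many
    return f"Sequence({current})" if many else current


print(navigation_type("Class", ["attributes", "type", "name"]))  # Sequence(String)
print(navigation_type("Attribute", ["type", "name"]))            # String
```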

More generally, I must admit that the big issue seems to me to be the lack of a real ‘prototyping/dev’ environment and a ‘production’ environment, each with dedicated tooling. Too often, the associations you have to make with the input model(s) and the output model(s), the need to configure a special project and a run configuration in Eclipse… all of this is pretty heavy. In ‘dev mode’ everything should be far easier to do, and once the transformations are ‘production’ ready, you can spend more time configuring/creating all the glue needed to integrate them.

I know this is pretty simplistic, but why should all of this be so cumbersome just to enjoy coding your transformation?