The specification and implementation of the User Interface (UI) of a system are key aspects of any software development project. In most cases, this UI takes the form of a Graphical User Interface (GUI) that encompasses a number of visual components to offer rich interactions between the user and the system. Nowadays, however, a new generation of UIs that integrate more interaction modalities (such as chat, voice and gesture) is gaining popularity. Moreover, many of these new UIs are becoming complex software artifacts themselves, for instance through AI-enhanced software components that enable even more natural interactions, including the possibility of using Natural Language Processing (NLP) via chatbots or voicebots. These NLP-based interfaces are commonly referred to as Conversational User Interfaces (CUIs).

Moreover, several types of UIs are often combined as part of the same application (e.g. a chatbot in a web page), which is known as a Multiexperience User Interface. These multiexperience UIs may be built together by using a Multiexperience Development Platform (MXDP). According to Gartner, “MXDPs serve to centralize life cycle activities for a portfolio of multiexperience apps. Multiexperience refers to the various permutations of modalities (e.g., touch, voice and gesture), devices and apps that users interact with on their digital journey. Multiexperience development involves ensuring a consistent user experience across web, mobile, wearable, conversational and immersive touchpoints”.

According to Gartner, by 2023 more than 25% of the apps at large enterprises will be built and/or run through a multiexperience platform.

MXDP and Conversational User Interfaces

MXDPs need to pay special attention to CUIs. The growing popularity of all kinds of intelligent Conversational User Interfaces, and their interaction with the other interfaces of the system, is undeniable. The most relevant example is the rise of bots, which are being increasingly adopted in domains such as e-commerce or customer service as a direct communication channel between companies and end-users. A bot wraps a CUI as a key component but complements it with a behavior specification that defines how the bot should react to a given user message. Bots are classified into different types depending on the channel employed to communicate with the user. For instance, in chatbots the user interaction is through textual messages, in voicebots it is through speech, and in gesture bots it is through interactive images. Note that in all cases bots are the mechanism that implements a conversation; only the medium where this conversation takes place changes. As such, bots always follow a similar working schema, depicted in Figure 1 (the featured image in the heading of this post).

As you can see in that figure, the conversation capabilities of a bot are usually designed as a set of intents, where each intent represents a possible user goal when interacting with the bot. The bot then waits for its CUI front-end to detect a match between the user’s input text (called an utterance) and one of the intents the bot implements. The matching phase may rely on external Intent Recognition Providers (e.g. DialogFlow, Amazon Lex, IBM Watson, …). When there is a match, the bot back-end executes the required behavior, optionally calling external services for more complex responses. Finally, the bot produces a response that is returned to the user (via text, voice or images, depending on the type of CUI).

As an example, we show in Figure 2 a bot that gives the weather forecast for any city in the world. Following the working schema sketched in Figure 1, this weather bot defines several intents, such as asking for a weather forecast. When a user writes (in a chatbot) or says (in a voicebot) “What is the weather today in Barcelona?” or “What is the forecast for today in Barcelona?”, the intent for asking for a weather forecast is matched and “Barcelona” is recognized as a city parameter (also called an “entity”) to be used when building the response. Then, the bot calls an external service (in this case, the REST API of OpenWeather) to look up this information and return it to the user. For a deeper introduction to bots, see this tutorial we gave at ICSE’21.

Figure 2. Screenshot of our example chatbot created with Xatkit.
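The working schema described above (intent matching, entity extraction, a call to an external service and a response) can be sketched in a few lines of Python. This is only an illustrative sketch, not how Xatkit or any Intent Recognition Provider works internally: the regex-based `recognize` function is a toy stand-in for a real NLP provider, `canned_forecast` is a hypothetical placeholder for the OpenWeather call, and all class and function names are assumptions made for this example.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Intent:
    """A user goal, described by regex patterns with named groups for entities."""
    name: str
    patterns: List[str]


@dataclass
class Match:
    intent: Intent
    entities: Dict[str, str]


def recognize(utterance: str, intents: List[Intent]) -> Optional[Match]:
    """Toy stand-in for an Intent Recognition Provider: the first pattern that matches wins."""
    text = utterance.strip()
    for intent in intents:
        for pattern in intent.patterns:
            found = re.fullmatch(pattern, text, flags=re.IGNORECASE)
            if found:
                return Match(intent, found.groupdict())
    return None


def canned_forecast(city: str) -> str:
    """Hypothetical placeholder for the external service call; returns hard-coded data."""
    return {"barcelona": "sunny"}.get(city.lower(), "unknown")


GET_WEATHER = Intent(
    name="GetWeather",
    patterns=[
        r"what is the weather today in (?P<city>[\w\s]+?)\s*\??",
        r"what is the forecast for today in (?P<city>[\w\s]+?)\s*\??",
    ],
)


def reply(utterance: str, forecast: Callable[[str], str] = canned_forecast) -> str:
    """Match the utterance, extract the city entity, query the service, build a response."""
    match = recognize(utterance, [GET_WEATHER])
    if match is None:
        return "Sorry, I did not understand that."
    city = match.entities["city"]
    return f"The weather today in {city} is {forecast(city)}."


print(reply("What is the weather today in Barcelona?"))
```

Passing the service as the `forecast` parameter keeps the conversation logic independent of the back-end, so the canned function could later be swapped for a real REST client without touching the intent definitions.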

Despite the increasing popularity of these new types of user interfaces, and the rich possibilities they offer when combined with preexisting GUIs or other CUIs, we are still missing dedicated software development methods and techniques to facilitate the definition and implementation of CUIs as part of a multiexperience development project. The fragmented ecosystem of languages, libraries and APIs for building them (often proprietary and vendor-specific) adds a new challenge to their development. Besides, their development is often completely separated from that of the rest of the system, as an ad-hoc extension. This raises significant integration, evolution and maintenance challenges for CUIs and the MXDP paradigm. Developers need to handle the coordination of the cognitive services to build multiexperience UIs, integrate them with external services, and worry about extensibility, scalability, and maintenance.

We believe a model-driven approach for MXDP could be an important first step towards facilitating the specification of rich UIs able to coordinate and collaborate to provide the best experience for end-users. Indeed, most non-trivial systems adhere to some kind of model-based philosophy, where software design models (including GUI models) are transformed into the production code the system executes at run-time. This transformation can be (semi)automated in some cases.

Our paper Towards a Model-Driven Approach for Multiexperience AI-based User Interfaces, co-authored by Elena Planas, Gwendal Daniel, Marco Brambilla and Jordi Cabot, was recently published in the International Journal on Software and Systems Modeling (SoSyM) and is available online here (open access). In it, we explore the application of model-driven techniques to the development of software applications embedding a multiexperience UI. Our contribution is twofold:

- We propose to raise the abstraction level used in the definition of this new breed of conversational and smart interfaces, facilitating their specification and implementation on a variety of platforms.

- We show how these CUI models can be used in conjunction with more “traditional” GUI models to combine the benefits of all these different types of interfaces in a multiexperience development project.

Modeling Conversational User Interfaces

In order to raise the abstraction level at which CUIs are defined, we present a new Domain Specific Language (DSL) that generalizes the one discussed here to cover all types of CUIs (and not just chatbots), and that follows state-machine semantics to facilitate the definition of more complex flows and behaviors in the interaction with the users.

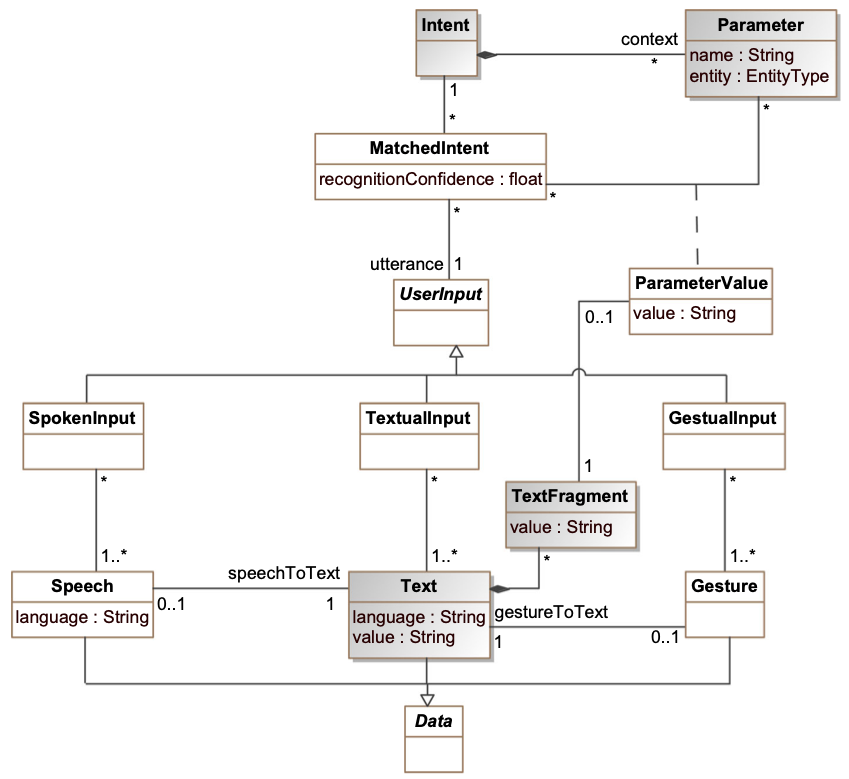

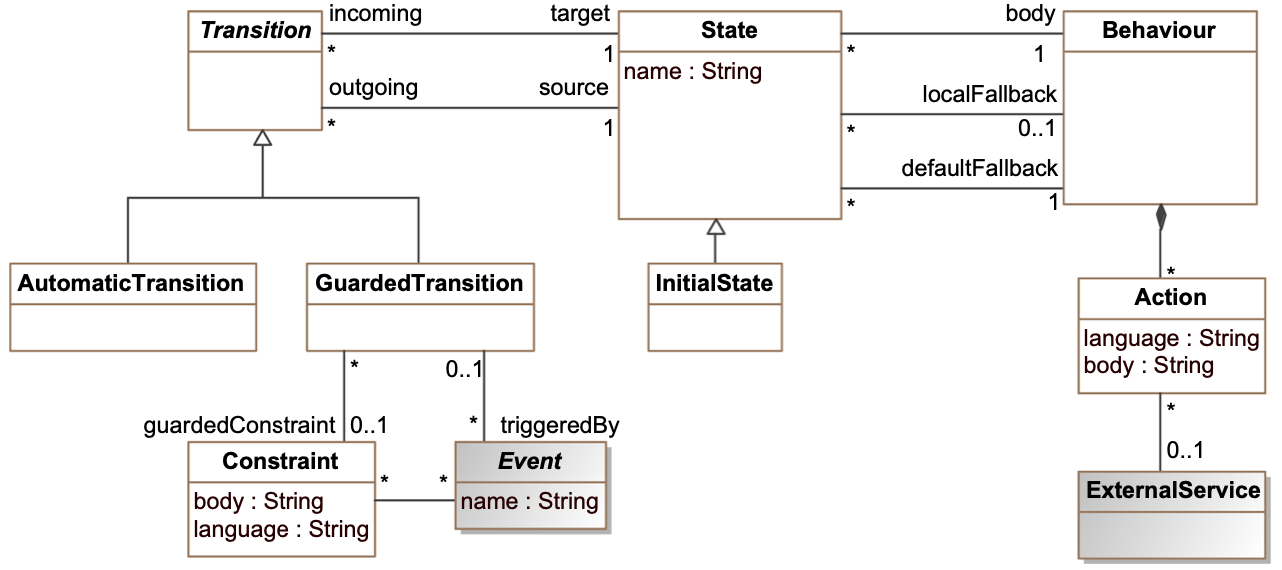

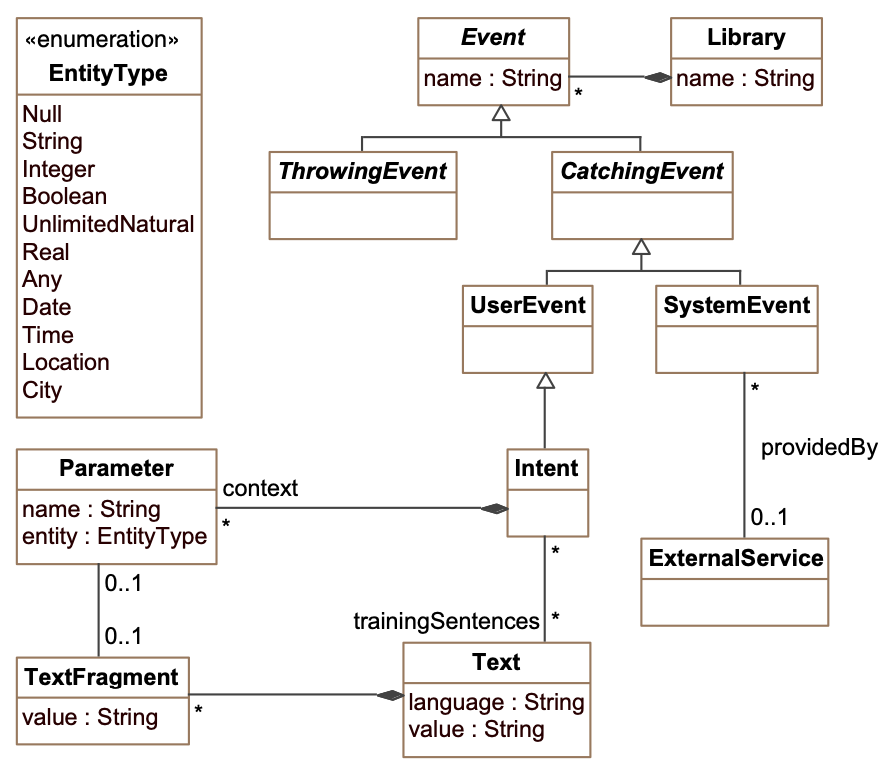

A DSL is defined through two main components: (i) an abstract syntax (metamodel) which specifies the language concepts and their relationships (in this context, generalizing the primitives provided by the major intent recognition platforms used by bots), and (ii) a concrete syntax which provides a specific (textual or graphical) representation to specify models conforming to the abstract syntax. We provide the abstract syntax of our language split into three packages in order to facilitate its readability:

- the intent package metamodel (see Figure 3), which describes the set of concepts used for modeling the intent definitions at design time;

- the behavioural package metamodel (see Figure 4), which defines the set of concepts used for modeling the execution logic of the intent package, following the UML state-machine formalism; and

- the runtime package metamodel (see Figure 5), which defines some classes used during the runtime execution of the deployed bot.
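To give a feel for how the three packages relate to each other, here is a minimal Python sketch. It is not the metamodel from Figures 3 to 5: every class name (`Intent`, `State`, `Transition`, `Context`, `Bot`) and every attribute is a hypothetical simplification chosen for this example. It only illustrates the general idea of intents defined at design time, a state machine whose transitions are triggered by recognized intents, and a runtime object that tracks one conversation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


# Intent package (design time): what the user may want.
@dataclass(frozen=True)
class Intent:
    name: str
    training_sentences: tuple


# Runtime package: per-conversation data kept while the bot is running.
@dataclass
class Context:
    current_state: str
    params: Dict[str, str] = field(default_factory=dict)


# Behavioural package: states and intent-triggered transitions.
@dataclass
class Transition:
    on_intent: str
    target: str


@dataclass
class State:
    name: str
    body: Callable[[Context], Optional[str]]  # runs when the state is entered
    transitions: List[Transition] = field(default_factory=list)


class Bot:
    def __init__(self, states: List[State], initial: str) -> None:
        self.states = {state.name: state for state in states}
        self.initial = initial

    def new_session(self) -> Context:
        return Context(current_state=self.initial)

    def fire(self, ctx: Context, intent_name: str, **params: str) -> Optional[str]:
        """Follow the transition triggered by a recognized intent, if any."""
        for transition in self.states[ctx.current_state].transitions:
            if transition.on_intent == intent_name:
                ctx.params.update(params)
                ctx.current_state = transition.target
                return self.states[transition.target].body(ctx)
        return None  # no matching transition: stay in the current state


get_weather = Intent("GetWeather", ("What is the weather today in Barcelona?",))

weather_bot = Bot(
    states=[
        State("AwaitingInput", body=lambda ctx: None,
              transitions=[Transition(get_weather.name, "ReplyWeather")]),
        State("ReplyWeather",
              body=lambda ctx: f"Looking up the forecast for {ctx.params['city']}.",
              transitions=[Transition(get_weather.name, "ReplyWeather")]),
    ],
    initial="AwaitingInput",
)

session = weather_bot.new_session()
print(weather_bot.fire(session, "GetWeather", city="Barcelona"))
```

Keeping the intent definitions separate from the states means the same intents could, in principle, drive different behaviours, which is the kind of separation the package split above is meant to support.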

Refer to the paper for the full specification of this DSL and the details on how we then combine it with existing GUI metamodels, e.g. the IFML one.

Summary

Our work is the first step in a more ambitious model-driven approach for MXDP, one that helps software engineers embark on this type of development project and provide end-users with a great user interface that goes well beyond clicking on graphical controls. There are still plenty of research challenges and potential improvements to address on the way to this vision, so stay tuned for our further developments in this area!

I’m a lecturer at the Open University of Catalonia (UOC) and a researcher at SOM Research Lab (IN3-UOC). My research interests are mainly focused on model analysis under the Model-Driven Engineering (MDE) perspective.
