Sharing research artifacts is known to help academics and practitioners build upon existing knowledge, adopt novel contributions in practice, and increase the chances of papers receiving attention. In Model-Driven Engineering (MDE), openly providing research artifacts has become vital for the broader adoption of AI techniques, a goal that can only become feasible if large open datasets and confidence measures for their quality are available.

Nevertheless, a few questions may pop into people’s heads when they are creating, maintaining, or planning to share the byproducts of their research efforts:

- What is a good research artifact from the perspective of the overall MDE community?

- How can we improve the reproducibility, reusability, and repeatability of MDE research studies?

- What are the main challenges faced by MDE experts when producing and sharing research artifacts?

- What are the expectations of researchers and practitioners for a research artifact associated with a research study on MDE?

To answer these questions, Diego Damasceno (Radboud University) and Daniel Strüber (Radboud University and Chalmers | University of Gothenburg) designed a comprehensive methodology to investigate quality guidelines for MDE research artifacts. This post will briefly show this methodology and its primary outcomes. These findings were published at the 24th International Conference on Model-Driven Engineering Languages and Systems (MoDELS’21). More information about this work and its findings is available at https://mdeartifacts.github.io/.

| Main Contribution: A guideline set formed by 84 best practices, structured along 19 factual questions, to support artifact creation and sharing in MDE research projects. The guideline set can help address factual questions that researchers may ask about an MDE artifact and scrutinize artifact quality from different perspectives. |

I. INTRODUCTION

The term “reproducibility crisis” has gained traction as various research areas have reported challenges in reproducing studies [1]. Software engineering (SE) is by no means exempt from this phenomenon, as scientists also face difficulties in reusing and reproducing artifacts [2], even with the direct support of their peers [3], [4]. These issues led to the creation of various initiatives, including artifact evaluation committees [5]–[9] and the ACM SIGSOFT Empirical Standards [10]. Still, the lack of discipline-specific guidelines for research data management [14] allows researchers to hold conflicting, subjective expectations of artifact quality and, hence, to misunderstand the true potential of research artifacts [15].

In Model-Driven Engineering (MDE), openly providing research artifacts also plays a vital role for the following reasons:

- Despite some attempts to provide sets of models in consolidated repositories [16], [17] and collections of UML models [18], [19] and transformations [20], [21], there is still a lack of large datasets of models of diverse modeling languages and application domains [22]. Having more systematic artifact-sharing practices can aid the reuse of available models and support empirical evaluations of research tools and techniques.

- The need for consolidated artifact sharing practices in MDE research has recently become more pronounced as the community targets a broader use of artificial intelligence (AI) techniques. Thus, to benefit from the advances in machine and deep learning, MDE researchers should address the need for large open datasets and confidence measures for artifact quality.

This evidence suggests that the benefit of artifact sharing can be improved if research communities and institutions join efforts to establish common standards for data management and publishing [38]. However, this task can be difficult because researchers’ perceptions of an artifact’s quality may change depending on their communities, roles, and artifact types [15].

II. METHODOLOGY

To design and evaluate a guideline set for quality management of MDE research artifacts, the authors pursued an investigation based on five primary data sources:

- Existing general-purpose and discipline-independent quality guidelines of major venues in CS and SE;

- Project management literature focusing on quality management [34], [35] and the 5W2H method [35];

- MDE literature on tooling issues [32] and modeling artifact repositories [29];

- Their own experiences in the domain, including membership in artifact evaluation committees (AEC);

- And an online survey with MDE experts.

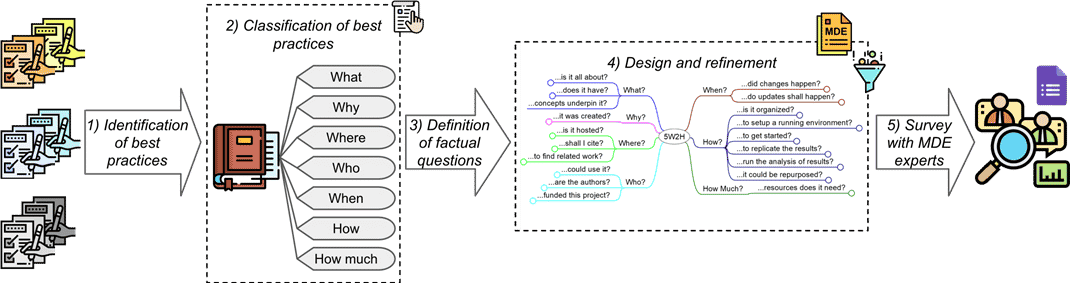

They designed a five-phase methodology based on these data sources, schematically depicted in Fig. 1 and discussed in the following sections.

Fig. 1: Designing a set of quality guidelines for MDE research artifact sharing

A. Identification of practices for artifact sharing

A set of eight guidelines for artifact sharing used by major CS and SE publishers, venues, and organizations was analyzed to identify researchers’ expectations for the quality of research artifacts. These included:

- The ACM Artifact Review and Badging [42]

- The EMSE Open Science Initiative [43]–[46]

- The Journal of Open Source Software (JOSS) [47]

- The Journal of Open Research Software (JORS) [48]

- The Guidelines by Wilson et al. [23]

- The NASA Open Source Software Projects [49]

- The TACAS artifact evaluation guideline [50]

- The CAV artifact evaluation guideline [51]

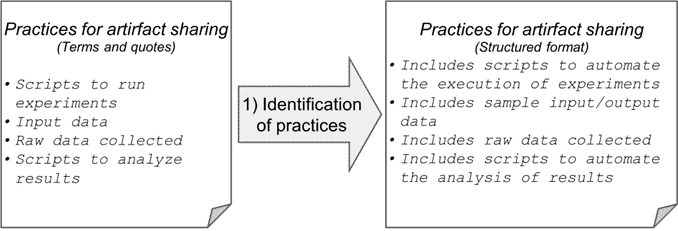

From these guideline sets, an initial list of 284 general-purpose practices was obtained by quoting recommendations from each document and rephrasing them using a controlled vocabulary and structure. Fig. 2 shows examples of extracted practices and their refined versions.

Fig. 2: Examples of extracted and refined practices

B. Categorization of best practices according to the 5W2H

To manage the vast range of expectations for artifacts’ usage and quality criteria identified, the authors employed the 5W2H method [35] to categorize the extracted practices. Using the 5W2H method, the authors rephrased the idea of a best practice for research artifact sharing as a hypothetical answer to a factual question that researchers or reviewers may ask about a given artifact. They adapted the 5W2H framework to the context of research artifacts and formulated a pattern to tag research practices using each of the five Ws and two Hs. Table I shows examples of practices categorized accordingly and their respective labels.

| Label | Best practice |
| --- | --- |
| What | Indicates the context of the software usage |
| Where | Shows how to cite the project (e.g., CITATION file) |
| When | Explains changes (e.g., CHANGELOG, commit) |
| Who | Uses open/non-proprietary file formats |
| How | There are scripts for every stage of data processing |
| How | Suggests other potential applications |
| How Much | Provides a way to replicate the results with modest resources |

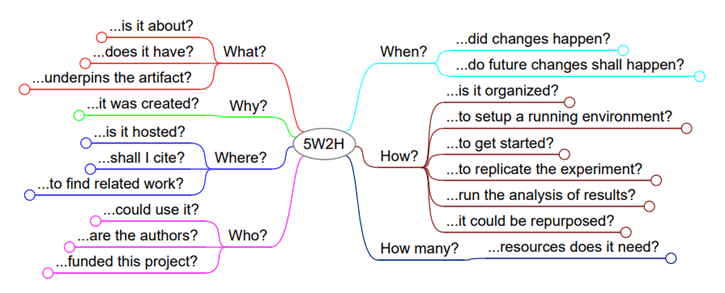

The main goal of this step was to gain insights into the types of factual questions that the extracted practices could address. This task was handled using mind mapping [35] to structure the practices into branches labeled by the 5W2H perspectives. Three categories dominated, namely How, What, and Where. Together, these categories accounted for 83.28% of the initial set of practices.

During the labeling process, the following pattern was used:

- What: practices associated with the artifact’s overall description, context, and content.

- Where: practices associated with repository hosting, artifact citation, and related work.

- Why: practices related to the reasoning to create an artifact, its objectives, and its main advantages.

- Who: practices associated with usage rights, licensing, authors’ details, and funding agencies.

- When: practices related to version control and identification, updates, and plans.

- How: practices associated with the environment setup, replications, analysis of results, and repurposing.

- How much: practices related to quantitative information about system requirements and the time needed to run the artifact.
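The labeling pattern can be sketched as a small lookup table. The following Python snippet is only an illustration: the names `PERSPECTIVE_CONCERNS` and `perspectives_for` are hypothetical, the concern keywords are paraphrased from the pattern above, and the simple substring matching is an assumption made for the example rather than the procedure the authors followed.

```python
# Concerns per 5W2H perspective, paraphrased from the labeling pattern above.
# The structure and names are illustrative assumptions, not part of the study.
PERSPECTIVE_CONCERNS = {
    "What": ["description", "context", "content"],
    "Where": ["repository hosting", "citation", "related work"],
    "Why": ["reasoning", "objectives", "advantages"],
    "Who": ["usage rights", "licensing", "authors", "funding"],
    "When": ["version control", "updates", "plans"],
    "How": ["environment setup", "replication", "analysis of results", "repurposing"],
    "How Much": ["system requirements", "time needed"],
}


def perspectives_for(concern: str) -> list[str]:
    """Return the perspectives whose concern list mentions the given concern."""
    needle = concern.lower()
    return [
        perspective
        for perspective, concerns in PERSPECTIVE_CONCERNS.items()
        if any(needle in c for c in concerns)
    ]


print(perspectives_for("licensing"))  # ['Who']
print(perspectives_for("citation"))   # ['Where']
```

A table like this could serve as a starting checklist when deciding which factual question a new practice answers.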

| Perspective | % |
| --- | --- |
| What | 31.44 |
| Where | 17.00 |
| Why | 3.97 |
| Who | 7.93 |
| When | 2.83 |
| How | 34.84 |
| How Much | 1.98 |
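As a quick arithmetic check of the 83.28% figure, the following Python sketch ranks the perspectives by share and sums the three largest. The percentages are copied from the table; the variable names are chosen here for illustration only.

```python
# Share (in %) of extracted practices per 5W2H perspective, copied from the table.
shares = {
    "What": 31.44,
    "Where": 17.00,
    "Why": 3.97,
    "Who": 7.93,
    "When": 2.83,
    "How": 34.84,
    "How Much": 1.98,
}

# Rank perspectives by share and keep the three largest.
top_three = sorted(shares, key=shares.get, reverse=True)[:3]
top_three_total = round(sum(shares[p] for p in top_three), 2)

print(top_three)        # ['How', 'What', 'Where']
print(top_three_total)  # 83.28
```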

C. Definition of intermediate factual questions

After labeling each practice, their associated 5W2H tags were used as a basis to propose factual questions that researchers and reviewers could eventually ask about a given research artifact. These questions were designed to: help researchers systematically think about artifact sharing concerns, provide directions to additional improvement questions, and kick off the creation of domain-specific guidelines.

The mind map below illustrates how the factual questions were associated with each 5W2H perspective.

Fig. 3: Mind map with the final set of 19 factual questions

D. Design and refinement of the MDE-specific guidelines

Getting artifacts in good shape for publishing is often perceived as complex and time-consuming [52]. The authors refactored the mind map by matching and merging similar practices to cope with time and resource constraints in research projects. After this process, they re-elaborated their guidelines as a set of 77 practices.

At this point, their guidelines included practices concerned with general types of artifacts and issues, including version control and user instructions, which also apply to MDE artifacts. However, various aspects that are important in MDE research and that contribute to higher-quality models [30], e.g., model semantics, syntax, and technology, were still missing.

To tailor their guidelines, they analyzed a taxonomy of tool-related issues affecting the adoption of MDE in the industry [32] and a study on quality criteria for repositories of modeling artifacts [29]. Next, they produced seven extra practices covering MDE-specific concerns. The final set of guidelines, which includes 84 practices organized as answers to 19 factual questions, is available on their website [41].

E. Survey

Developing valuable research artifacts is challenging as researchers may have different expectations depending on their role and experience [15]. Thus, the authors designed a questionnaire survey for MDE experts. In this survey, they asked about challenges encountered in reusing MDE artifacts and about which practices in the guideline set should receive higher priority.

This survey was conducted between April and May 2021 among former members of the MODELS AEC and coauthors of papers (with associated artifacts) published over the last three years at the MODELS conference or in the Software and Systems Modeling (SoSyM) journal. Additionally, they invited MDE community members through their personal contact lists (e.g., email, LinkedIn, and Twitter) and mailing lists (including PlanetMDE [53]). Their recruitment activities led to a total of 90 responses. Table III shows an overview of the designed questionnaire survey.

| Topic | Description |
| --- | --- |
| Demographics data | Questions about the participants’ (Q1) gender and (Q2) current primary role |
| General experiences with artifacts | How would you rate your experience in (Q3) artifact development and sharing and (Q4) reusing artifacts in MDE research? (Q5) Have you ever submitted an artifact for evaluation? Have you ever (Q6) contacted other researchers or (Q7) been contacted by other researchers asking for help on reusing their artifacts? |
| Challenges in artifact sharing | (Q8) Which challenges have you encountered during the sharing and use of artifacts in MDE research projects? |
| Evaluation of the Guidelines | We asked participants to rate the (Q9-34) relevance of each one of the 84 practices and, if needed, recommend additional guidelines. |
| Final evaluation | How do you assess the (Q35) clarity, (Q36) completeness, and (Q37) relevance of these guidelines? Open field for (Q38) additional remarks or (Q39) providing email, if wanted to stay updated about our results. |

In this questionnaire, participants were invited to report demographic information, their general experiences with artifact sharing, and their understanding of what makes MDE artifact sharing and development a challenge. Participants were also asked to report a priority level for each best practice as either “Essential”, “Desirable”, or “Unnecessary”, and they had an open text field to suggest factual questions or practices. Participants interested in updates about the research could provide an email address. The platform used to run the study was Google Forms.

III. MAIN RESULTS

Based on the recruitment activities, the authors gathered responses from 90 participants. Table V shows the numbers of participants who had contacted or had been contacted by another researcher. These findings suggest that the participants have meaningful collective experiences with research artifacts.

| Made contact? | Had been contacted? | # | % |
| --- | --- | --- | --- |
| No | Yes | 13 | 14.4 |
| Yes | No | 13 | 14.4 |
| No | No | 15 | 16.7 |
| Yes | Yes | 49 | 54.4 |
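The percentages in this table follow directly from the counts and the 90 responses. A minimal Python sketch of that arithmetic is shown below; the dictionary layout and variable names are assumptions made for illustration, while the counts are taken from the table.

```python
# Counts from the table: (made contact?, had been contacted?) -> participants.
counts = {
    ("No", "Yes"): 13,
    ("Yes", "No"): 13,
    ("No", "No"): 15,
    ("Yes", "Yes"): 49,
}

total = sum(counts.values())

# Percentage of all participants in each row, rounded to one decimal place.
percentages = {key: round(100 * n / total, 1) for key, n in counts.items()}

# Participants with at least one kind of contact experience.
any_contact = total - counts[("No", "No")]

print(total)                       # 90
print(percentages[("Yes", "Yes")]) # 54.4
print(any_contact)                 # 75
```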

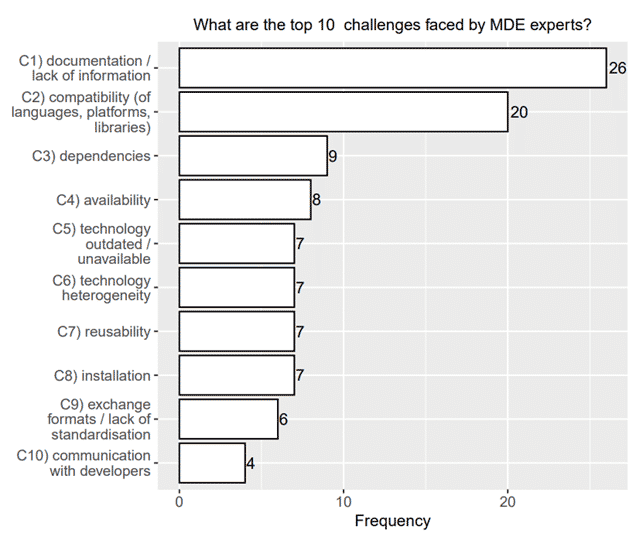

From the open-ended question, 66 responses were analyzed using an open coding scheme to classify, group, and quantify the main concern of each answer. In total, there were 28 groups of answers from which the top ten challenges are shown in Fig. 4 with their respective identifiers.

Fig. 4: Top 10 challenges faced in MDE artifact sharing
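The quantification step of open coding amounts to counting how often each code was assigned and ranking the codes. The Python sketch below illustrates this with invented data: the list `coded_answers` is hypothetical and does not reproduce the survey responses, and the code labels are only loosely inspired by the challenges named in this post.

```python
from collections import Counter

# Hypothetical codes, one main concern per open-ended answer (invented data).
coded_answers = [
    "lack of documentation",
    "compatibility issues",
    "lack of documentation",
    "technology heterogeneity",
    "compatibility issues",
    "lack of documentation",
]

# Count how often each code occurs and keep the most frequent ones.
challenge_counts = Counter(coded_answers)
top_challenges = challenge_counts.most_common(2)

print(top_challenges)
# [('lack of documentation', 3), ('compatibility issues', 2)]
```

With the real coded answers, `most_common(10)` would yield a ranking analogous to the one in Fig. 4.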

The identified challenges largely matched issues identified in a previous study [52], but there were noteworthy exceptions, such as technology heterogeneity, data exchange formats, and the lack of standardization. Also corroborating the findings by Timperley et al. [52], the lack of information and documentation about artifacts was reported as the topmost challenge faced by MDE experts. As one participant indicated: “Textual description about a model can be useful to better explain the model and mitigating doubts.” [P75]

Human comprehension is an important task that contributes to high-quality modeling artifacts [30]. Thus, to enhance researchers’ comprehension, artifact creators should provide useful information and documentation describing the context of development, the relevance of the artifact to the addressed problem, and its facilities. Compatibility issues were the second most reported challenge. To mitigate this problem, artifact authors should always provide details about the technologies and concepts that underpin the artifact. These may include the version identifiers of modeling languages, input file formats, or third-party artifacts used in the project, e.g., libraries, frameworks, and integrated development environments.

Although reporting a detailed description of an artifact may be seen as irrelevant or not worthwhile [52], having a good-enough set of information can improve artifact quality, reusability, and repurposing. For example, indicating the operating system and hardware context in which the artifact was developed and tested can support setting up experimental environments.
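For artifacts that ship Python scripts, part of this environment description can be captured automatically. The sketch below uses only the standard-library `platform` and `json` modules; the function name `describe_environment` and the chosen fields are assumptions for illustration, and an MDE artifact would typically also need to record modeling-tool and language versions by other means.

```python
import json
import platform


def describe_environment() -> dict:
    """Collect basic facts about the machine on which the artifact is run."""
    return {
        "os": platform.system(),              # e.g., 'Linux', 'Windows', 'Darwin'
        "os_release": platform.release(),     # kernel or OS release string
        "machine": platform.machine(),        # hardware architecture, e.g., 'x86_64'
        "python_version": platform.python_version(),
    }


# Print the description as JSON so it can be pasted into a README.
print(json.dumps(describe_environment(), indent=2))
```

Storing this output next to the results gives reusers a concrete reference point when they try to reproduce the setup.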

| Main Finding #1: MDE experts reported 28 main challenges. The two most common challenges were lack of documentation and compatibility issues between languages, platforms, and libraries. |

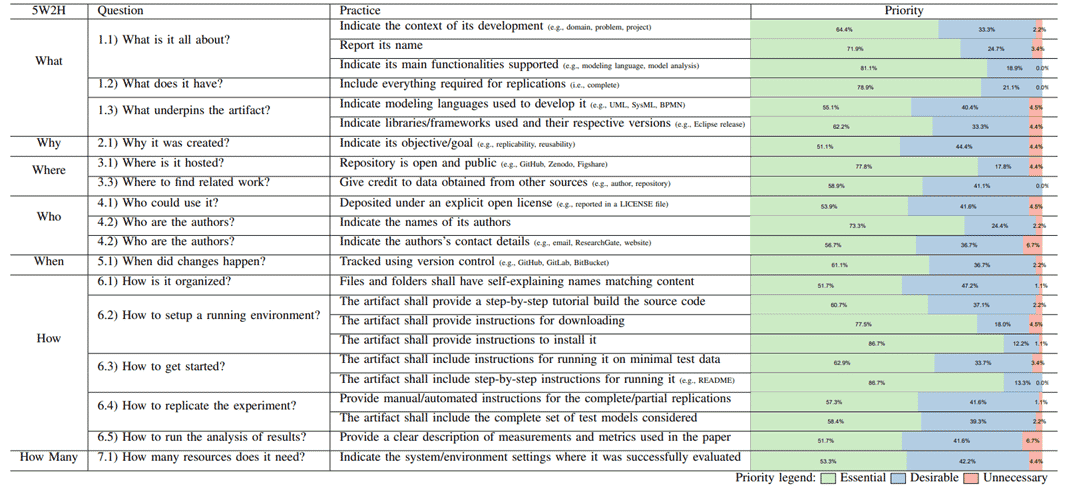

To evaluate the proposed guidelines, the authors analyzed the priorities suggested by the participants for all 84 practices. Each practice was classified into one of three priority levels (Essential, Desirable, or Unnecessary), adapted from the classification schema used in the ACM SIGSOFT Empirical Standards [10]. In case of doubt or a wish to omit an answer, participants also had a No answer alternative. Based on the priorities assigned, a set of top-priority practices was selected by picking those rated as Essential by at least 50% of the participants.
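The selection rule can be sketched in a few lines of Python. Everything in the example is hypothetical: the practice names and votes are invented, and counting “No answer” responses in the denominator is an assumption, since this post does not state how such responses were treated.

```python
from collections import Counter

# Hypothetical ratings for illustration only; practice names and votes are invented.
ratings = {
    "Provide a README": ["Essential", "Essential", "Desirable", "Essential"],
    "Provide a changelog": ["Desirable", "Essential", "Unnecessary", "No answer"],
}


def essential_share(votes: list[str]) -> float:
    """Fraction of all responses (including 'No answer') that are 'Essential'."""
    return Counter(votes)["Essential"] / len(votes)


# Keep practices rated Essential by at least 50% of the participants.
top_priority = [
    name for name, votes in ratings.items() if essential_share(votes) >= 0.5
]

print(top_priority)  # ['Provide a README']
```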

Table VII shows the 23 top-priority practices. The list of practices and priorities is available in the supplementary material [39], [40]. These findings are informative for users of the guidelines, such as artifact developers and organizers of artifact evaluation processes.

TABLE VII: Practices for MDE artifact sharing: 23 top-priority practices (out of 84 in total)

| Main Finding #2: Most participants rated the practices as at least desirable, but there was no agreement about the classification into essential vs. desirable. Among the 84 practices, there is a set of 23 top-priority practices that were deemed essential by more than half of the participants. |

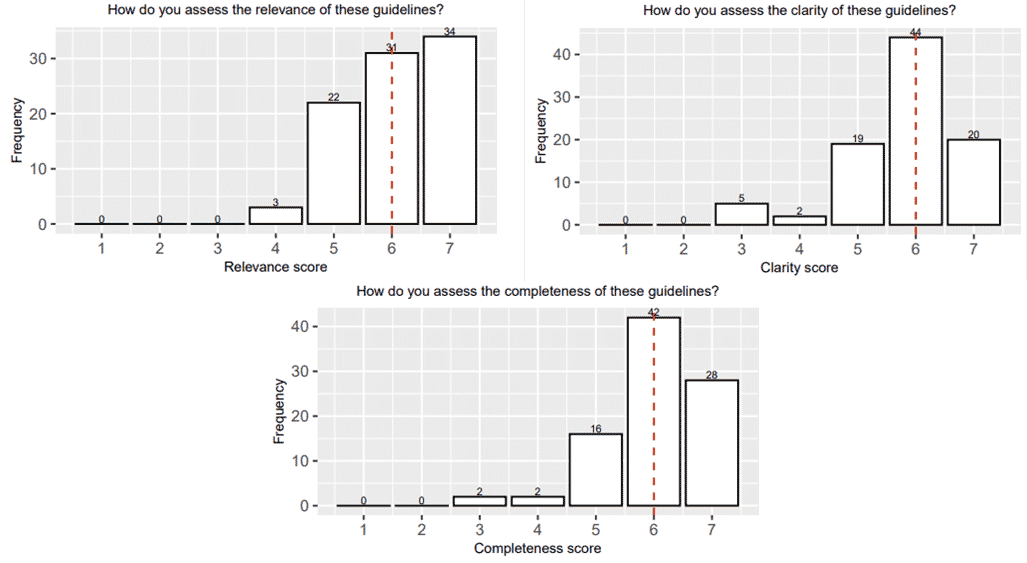

In the last part of the questionnaire, participants were asked to provide an overall score on a seven-point Likert scale to the guidelines’ completeness, clarity, and relevance. Fig. 5 shows the frequency of scores for completeness, clarity, and relevance with their respective medians indicated as a vertical dashed line.

Fig. 5: Relevance, clarity, and completeness ratings

Overall, more than 92% of the participants reported positive quality scores (between 5 and 7 on the seven-point Likert scale). Specifically, 95.5%, 92.2%, and 96.6% of participants reported positive scores for completeness, clarity, and relevance, respectively. No participant gave a score below three points. In the textual remarks, there were positive sentiments that mirror the positive scores, for example:

| “Broad implementation of guidelines such as these is ESSENTIAL for advancing MDE technologies and research!” [P13] |

In the textual feedback, there were also some negative comments concerned with the guideline clarity:

| “In terms of clarity, the questions use some abstract terms, in particular sharing. I was somehow confused by this term since sharing MDE artifacts may be associated with a research paper or not. Specially when the artifact is produced in an industrial context.” [P58] |

Overall, these findings indicate that the guideline set for MDE artifact sharing was seen as reasonably complete, relevant, and clear. However, there is still room for improvements, such as a need for practices considering particular artifacts, stakeholders, and circumstances in which artifacts are produced (e.g., industry, academia).

| Main Finding #4: The surveyed MDE experts assess the completeness, relevance, and clarity of our guidelines as largely positive, with between 92.2% and 96.6% positive scores in each dimension. We identified a number of improvement opportunities. |

IV. IMPLICATIONS FOR ARTIFACT STAKEHOLDERS

Implications for artifact authors: These guidelines have been designed as a toolkit to support researchers in creating, sharing, and maintaining artifacts in MDE research. The priority levels rank practices so that authors can focus on addressing them by relevance. Moreover, the top 10 issues reported by MDE experts can also indicate frequently encountered problems in MDE research and drive the authors’ efforts to mitigate them.

Implications for artifact evaluation organizers and reviewers: As previous surveys have shown [15], [54], the lack of consensus on quality standards and discipline-specific guidelines for research data management (RDM) leaves room for subjectivity. This work complements other initiatives, such as the ACM SIGSOFT Empirical Standards, by providing domain-specific guidelines that aid the review of MDE artifacts. However, due to the lack of explicit agreement between participants, the current form of this guideline set is not intended to represent “The” definitive list of best practices. Nevertheless, it can kick off the creation of venue-specific recommendations, quality criteria, or lists of frequently asked questions for MDE research artifacts.

Improvement opportunities: From the participants’ feedback, there is still room for improvement, such as considering privacy-preserving techniques for artifact sharing in industrial research as an extraordinary practice, and establishing viewpoint-specific practices for artifact stakeholders (e.g., users, open-source contributors, AEC reviewers, industrial practitioners/researchers).

V. CONCLUSION AND FUTURE WORK

Artifact sharing is known to help researchers and practitioners build upon existing knowledge, adopt novel contributions in practice, and increase the chances of papers receiving citations. In MDE research, there is an urgent need for artifact sharing, large open datasets, and confidence measures about their quality, especially given the MDE community’s broader interest in AI-based techniques. This blog post summarizes the findings of a MoDELS’21 paper that introduces a set of quality guidelines specifically tailored for MDE research artifacts.

This guideline set comprises various MDE-specific recommendations for artifact sharing. These practices are suggested as answers to factual questions that researchers can use to systematically think about concerns in MDE artifact sharing and to find directions for additional improvements.

In a survey of 90 MDE experts, more than 92% positively assessed these guidelines’ clarity, completeness, and relevance and reported priority levels that can support decision-making in the creation, sharing, reuse, and evaluation of MDE research artifacts. The complete set of generic practices, MDE-specific guidelines, and factual questions [39]–[41] is available on the project website https://mdeartifacts.github.io/.

The authors envision several future directions for this work, such as:

- Investigate when (e.g., artifact type, development stage) and for whom (e.g., viewpoints) a practice should be essential, desirable, or unnecessary.

- Investigate whether other aspects (e.g., gender and previous experiences) may impact the priority of the guidelines.

- Experiment with the aforementioned methodology in other fields, such as software product line engineering, where there is a need for consolidated community benchmarks [62].

REFERENCES

[1] H. Pashler and E.-J. Wagenmakers, “Editors’ introduction to the special section on replicability in psychological science: A crisis of confidence?” Perspectives on Psychological Science, vol. 7, no. 6, pp. 528–530, 2012, place: US Publisher: Sage Publications.

[2] C. Collberg and T. A. Proebsting, “Repeatability in computer systems research,” Communications of the ACM, vol. 59, no. 3, pp. 62–69, Feb. 2016.

[3] J. Lung, J. Aranda, S. M. Easterbrook, and G. V. Wilson, “On the difficulty of replicating human subjects studies in software engineering,” in Proceedings of the 30th international conference on Software engineering, ser. ICSE ’08. New York, NY, USA: Association for Computing Machinery, May 2008, pp. 191–200.

[4] L. Glanz, S. Amann, M. Eichberg, M. Reif, B. Hermann, J. Lerch, and M. Mezini, “CodeMatch: obfuscation won’t conceal your repackaged app,” in Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering, ser. ESEC/FSE 2017. New York, NY, USA: Association for Computing Machinery, Aug. 2017, pp. 638–648.

[5] ESEC/FSE, “Call for Artifact Evaluation | ESEC/FSE 2011,” 2011. [Online]. Available: http://2011.esec-fse.org/cfp-artifact-evaluation

[6] ESEC/FSE, “ESEC/FSE 2020 – Artifacts – ESEC/FSE 2020,” 2020. [Online]. Available: https://2020.esec-fse.org/track/esecfse-2020-artifacts

[7] ICSE, “ICSE 2020 – Artifact Evaluation – ICSE 2020,” 2020. [Online]. Available: https://2020.icse-conferences.org/track/icse-2020-Artifact-Evaluation

[8] SPLC, “Call for research papers – 24th ACM International Systems and Software Product Line Conference,” 2020. [Online]. Available: https://splc2020.net/call-for-papers/call-for-research-papers/

[9] MODELS, “MODELS 2020 – Artifact Evaluation – MODELS 2020,” 2020. [Online]. Available: https://conf.researchr.org/track/models-2020/

[10] P. Ralph, S. Baltes, D. Bianculli, Y. Dittrich, M. Felderer, R. Feldt, A. Filieri, C. A. Furia, D. Graziotin, P. He, R. Hoda, N. Juristo, B. Kitchenham, R. Robbes, D. Mendez, J. Molleri, D. Spinellis, M. Staron, K. Stol, D. Tamburri, M. Torchiano, C. Treude, B. Turhan, and S. Vegas, “ACM SIGSOFT Empirical Standards,” arXiv:2010.03525 [cs], Oct. 2020.

[11] V. Basili, F. Shull, and F. Lanubile, “Building knowledge through families of experiments,” IEEE Transactions on Software Engineering, vol. 25, no. 4, pp. 456–473, Jul. 1999.

[12] I. von Nostitz-Wallwitz, J. Krüger, and T. Leich, “Towards improving industrial adoption: the choice of programming languages and development environments,” in Proceedings of the 5th International Workshop on Software Engineering Research and Industrial Practice, ser. SER&IP’18. New York, NY, USA: Association for Computing Machinery, May 2018, pp. 10–17.

[13] G. Colavizza, I. Hrynaszkiewicz, I. Staden, K. Whitaker, and B. McGillivray, “The citation advantage of linking publications to research data,” PLOS ONE, vol. 15, no. 4, p. e0230416, Apr. 2020, arXiv: 1907.02565.

[14] M. Grootveld, E. Leenarts, S. Jones, E. Hermans, and E. Fankhauser, “OpenAIRE and FAIR Data Expert Group survey about Horizon 2020 template for Data Management Plans,” Jan. 2018. [Online]. Available: http://doi.org/10.5281/zenodo.1120245

[15] B. Hermann, S. Winter, and J. Siegmund, “Community expectations for research artifacts and evaluation processes,” in Proceedings of the 28th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering. New York, NY, USA: Association for Computing Machinery, Nov. 2020.

[16] R. France, J. Bieman, and B. H. Cheng, “Repository for model driven development (remodd),” in International Conference on Model Driven Engineering Languages and Systems. Springer, 2006, pp. 311–317.

[17] O. Babur, “A labeled ecore metamodel dataset for domain clustering,” 2019, type: dataset. [Online]. Available: https://zenodo.org/record/2585456

[18] G. Robles, T. Ho-Quang, R. Hebig, M. R. Chaudron, and M. A. Fernandez, “An extensive dataset of UML models in GitHub,” in 2017 IEEE/ACM 14th International Conference on Mining Software Repositories (MSR). IEEE, 2017, pp. 519–522.

[19] B. Karasneh, “An online corpus of UML design models: construction and empirical studies,” Ph.D. dissertation, Leiden University, 2016.

[20] eclipse.org, “ATL Transformation Zoo.” [Online]. Available: https://www.eclipse.org/atl/atlTransformations/

[21] D. Strüber, T. Kehrer, T. Arendt, C. Pietsch, and D. Reuling, “Scalability of model transformations: Position paper and benchmark set,” in BigMDE’16: Workshop on Scalability in Model-Driven Engineering, 2016, pp. 21–30.

[22] F. Basciani, J. Di Rocco, D. Di Ruscio, L. Iovino, and A. Pierantonio, “Model repositories: Will they become reality?” in CloudMDE@ MoDELS, 2015, pp. 37–42.

[23] G. Wilson, J. Bryan, K. Cranston, J. Kitzes, L. Nederbragt, and T. K. Teal, “Good enough practices in scientific computing,” PLOS Computational Biology, vol. 13, no. 6, p. e1005510, Jun. 2017, publisher: Public Library of Science.

[24] C. Wohlin, P. Runeson, M. Höst, M. C. Ohlsson, B. Regnell, and A. Wesslén, Experimentation in Software Engineering. Springer Publishing Company, Incorporated, 2012.

[25] R. University, “Research Data management,” 2021, last Modified: 2019-06-14.

[26] S. van Eeuwijk, T. Bakker, M. Cruz, V. Sarkol, B. Vreede, B. Aben, P. Aerts, G. Coen, B. van Dijk, P. Hinrich, L. Karvovskaya, M. Keijzer-de Ruijter, J. Koster, J. Maassen, M. Roelofs, J. Rijnders, A. Schroten, L. Sesink, C. van der Togt, J. Vinju, and P. de Willigen, “Research software sustainability in the Netherlands: Current practices and recommendations,” Zenodo, Tech. Rep., Feb. 2021.

[27] L. Corti, V. Van den Eynden, L. Bishop, and M. Woollard, Managing and sharing research data: a guide to good practice, 2nd ed. Thousand Oaks, CA: SAGE Publications, 2019.

[28] M. Brambilla, J. Cabot, and M. Wimmer, Model-driven software engineering in practice, ser. Synthesis lectures on software engineering. Morgan&Claypool, 2012.

[29] F. P. Basso, C. M. L. Werner, and T. C. Oliveira, “Revisiting Criteria for Description of MDE Artifacts,” in 2017 IEEE/ACM Joint 5th International Workshop on Software Engineering for Systems-of-Systems and 11th Workshop on Distributed Software Development, Software Ecosystems and Systems-of-Systems (JSOS), May 2017, pp. 27–33.

[30] J. Krogstie, “Quality of Models,” in Model-Based Development and Evolution of Information Systems: A Quality Approach, J. Krogstie, Ed. London: Springer, 2012, pp. 205–247.

[31] D. Steinberg, F. Budinsky, M. Paternostro, and E. Merks, EMF: Eclipse Modeling Framework, 2nd Edition, 2nd ed. Addison-Wesley Professional., Dec. 2008.

[32] J. Whittle, J. Hutchinson, M. Rouncefield, H. Burden, and R. Heldal, “A taxonomy of tool-related issues affecting the adoption of model-driven engineering,” Software & Systems Modeling, vol. 16, no. 2, pp. 313–331, May 2017.

[33] R. Katz, “Challenges in Doctoral Research Project Management: A Comparative Study,” International Journal of Doctoral Studies, vol. 11, pp. 105–125, 2016.

[34] P. M. Institute, Ed., A guide to the project management body of knowledge / Project Management Institute, 6th ed., ser. PMBOK guide. Newtown Square, PA: Project Management Institute, 2017.

[35] N. R. Tague, The quality toolbox, 2nd ed. Milwaukee, Wis: ASQ Quality Press, 2005.

[36] Z. Pan and G. M. Kosicki, “Framing analysis: An approach to news discourse,” Political Communication, vol. 10, no. 1, pp. 55–75, Jan. 1993.

[37] G. Hart, “The five Ws: An old tool for the new task of audience analysis,” Technical Communication, vol. 43, no. 2, pp. 139–145, 1996. [Online]. Available: http://www.geoff-hart.com/articles/1995-1998/five-w.htm

[38] The Royal Society, “Science As an Open Enterprise,” Royal Society, London, Tech. Rep., 2012. [Online]. Available: https://royalsociety.org/topics-policy/projects/science-public-enterprise/report/

[39] C. D. N. Damasceno and D. Strüber, “damascenodiego/mdeartifacts.github.io: Artifacts for this paper,” Jul. 2021. [Online]. Available: https://doi.org/10.5281/zenodo.5109401

[40] ——, “damascenodiego/mdeartifacts.github.io,” Jul. 2021. [Online]. Available: https://github.com/damascenodiego/mdeartifacts.github.io

[41] ——, “The MDE Artifacts project,” 2021. [Online]. Available: https://mdeartifacts.github.io/

[42] ACM, “Artifact Review and Badging – Current,” Aug. 2020. [Online]. Available: https://www.acm.org/publications/policies/artifact-review-and-badging-current

[43] D. Mendez Fernández, M. Monperrus, R. Feldt, and T. Zimmermann, “The open science initiative of the Empirical Software Engineering journal,” Empirical Software Engineering, vol. 24, no. 3, pp. 1057–1060, Jun. 2019.

[44] M. Monperrus, “How to make a good open-science repository?” Dec. 2019, section: Updates in Data. [Online]. Available: https://researchdata.springernature.com/posts/57389-how-to-make-a-good-open-science-repository

[45] EMSE, “EMSE Open Science Initiative,” Mar. 2021, original-date: 2018-06-28T15:35:56Z. [Online]. Available: https://github.com/emsejournal/openscience/blob/master/README.md

[46] EMSE, “EMSE Open science – Evaluation Criteria,” Mar. 2021. [Online]. Available: https://github.com/emsejournal/openscience/blob/master/review-criteria.md

[47] D. S. Katz, K. E. Niemeyer, and A. M. Smith, “Publish your software: Introducing the Journal of Open Source Software (JOSS),” Computing in Science Engineering, vol. 20, no. 3, pp. 84–88, May 2018, conference Name: Computing in Science Engineering.

[48] JORS, “The Journal of Open Research Software – Editorial Policies,” Feb. 2021. [Online]. Available: http://openresearchsoftware.metajnl.com/about/editorialpolicies/

[49] NASA, “NASA Open Source Software,” 2021. [Online]. Available: https://code.nasa.gov/

[50] TACAS, “TACAS 2019 – ETAPS 2019,” 2019. [Online]. Available: https://conf.researchr.org/track/etaps-2019/tacas-2019-papers#Artifact-Evaluation

[51] CAV, “Artifacts | CAV 2019,” 2019. [Online]. Available: http://i-cav.org/2019/artifacts/

[52] C. S. Timperley, L. Herckis, C. Le Goues, and M. Hilton, “Understanding and improving artifact sharing in software engineering research,” Empirical Software Engineering, vol. 26, no. 4, p. 67, 2021.

[53] PlanetMDE. planetmde – model driven engineering ANNOUNCEMENTS – info. [Online]. Available: https://listes.univ-grenoble-alpes.fr/sympa/info/planetmde

[54] R. Heumüller, S. Nielebock, J. Krüger, and F. Ortmeier, “Publish or perish, but do not forget your software artifacts,” Empirical Software Engineering, vol. 25, no. 6, pp. 4585–4616, Nov. 2020.

[55] M. Jasper, M. Mues, A. Murtovi, M. Schlüter, F. Howar, B. Steffen, M. Schordan, D. Hendriks, R. Schiffelers, H. Kuppens, and F. W. Vaandrager, “RERS 2019: Combining synthesis with real-world models,” in Tools and Algorithms for the Construction and Analysis of Systems, D. Beyer, M. Huisman, F. Kordon, and B. Steffen, Eds. Cham: Springer International Publishing, 2019, pp. 101–115.

[56] M. C. Murphy, A. F. Mejia, J. Mejia, X. Yan, S. Cheryan, N. Dasgupta, M. Destin, S. A. Fryberg, J. A. Garcia, E. L. Haines, J. M. Harackiewicz, A. Ledgerwood, C. A. Moss-Racusin, L. E. Park, S. P. Perry, K. A. Ratliff, A. Rattan, D. T. Sanchez, K. Savani, D. Sekaquaptewa, J. L. Smith, V. J. Taylor, D. B. Thoman, D. A. Wout, P. L. Mabry, S. Ressl, A. B. Diekman, and F. Pestilli, “Open science, communal culture, and women’s participation in the movement to improve science,” Proceedings of the National Academy of Sciences, vol. 117, no. 39, pp. 24154–24164, Sep. 2020.

[57] Wikipedia, “Social-desirability bias,” 2020, page Version ID: 992112847. [Online]. Available: https://en.wikipedia.org/w/index.php?title=Social-desirability_bias&oldid=992112847

[58] C. Jia, Y. Cai, Y. T. Yu, and T. H. Tse, “5W+1H pattern: A perspective of systematic mapping studies and a case study on cloud software testing,” Journal of Systems and Software, vol. 116, pp. 206–219, Jun. 2016.

[59] G. A. A. Prana, C. Treude, F. Thung, T. Atapattu, and D. Lo, “Categorizing the Content of GitHub README Files,” Empirical Software Engineering, vol. 24, no. 3, pp. 1296–1327, Jun. 2019.

[60] J. Zhang, K. Li, C. Yao, and Y. Sun, “Event-based summarization method for scientific literature,” Personal and Ubiquitous Computing, Apr. 2020.

[61] L. Perrier, E. Blondal, A. P. Ayala, D. Dearborn, T. Kenny, D. Lightfoot, R. Reka, M. Thuna, L. Trimble, and H. MacDonald, “Research data management in academic institutions: A scoping review,” PLOS ONE, vol. 12, no. 5, p. e0178261, May 2017, publisher: Public Library of Science.

[62] D. Strüber, M. Mukelabai, J. Krüger, S. Fischer, L. Linsbauer, J. Martinez, and T. Berger, “Facing the truth: benchmarking the techniques for the evolution of variant-rich systems,” in SPLC’19: International Systems and Software Product Line Conference. ACM, 2019, pp. 26:1–10.
