Domain-specific languages (DSLs) are widely used in practice and investigated in software engineering research. So far, however, language workbenches do not provide sufficient built-in decision support for language design and improvement.

This article presents an integrated end-to-end tool environment for performing controlled experiments in DSL engineering. Controlled experiments can give language engineers and researchers data-driven decision support: they allow different language features, such as the keywords used, the layout of the editor, or language recommendations, to be compared with evidence-based feedback, similar to A/B testing.

Our experiment environment is integrated into the language workbench Meta Programming System (MPS) [1]. The environment supports not only language design but also all steps of experimenting with the designed language, i.e., planning, operation, analysis & interpretation, as well as presentation & package.

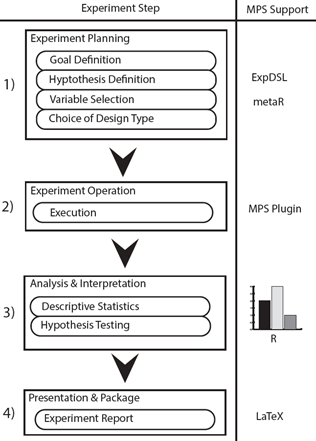

In this article, which is based on two previously published papers [2,3], we provide an overview of how the experimentation steps are implemented in our environment. As shown in Figure 1, each “classical” experimentation step is supported within MPS, either by a specific DSL for that task or by a plugin.

Figure 1: Steps of the experimentation process and tool support

For experiment planning, we implemented the experiment definition language ExpDSL, proposed by Freire et al. [4], in MPS. For experiment operation, we developed a plugin that provides monitoring support and collects runtime metrics while language users apply a specific deployed DSL. For analysis & interpretation, we integrate the DSL MetaR proposed by Campagne [5] (and the corresponding R code), as it simplifies data analysis with R and makes it feasible even for language engineers with limited experience in statistics. Finally, for presentation and reporting, we apply a DSL for LaTeX provided by Völter [6]. It can be used to automatically create reports and pre-filled LaTeX paper templates, including R plots, from which PDF reports can also be generated.

Controlled experiments for DSL design: a running example

In the following, we present more details on each step based on a running example in which two versions of a DSL for acceptance testing are compared. The prerequisite for an experiment is that the language engineer or researcher has created at least two DSL variants, which can then be compared with regard to a specific property such as the time needed to create a language instance or the document length.

Abstract: This paper presents a controlled experiment.

Goals

G1: Analyze the efficiency of similar test DSLs for the purpose of evaluation with respect to creation time from the point of view of a DSL user in the context of graduate students using assisting editors for test case creation.

Hypotheses

H0: The time to create tests with both DSLs is equal

H1: The time to create tests differs significantly

Figure 2: Experiment goal and hypotheses defined in ExpDSL

Experiment Planning

The language ExpDSL allows language engineers or researchers to formulate experiment goals, hypotheses, independent and dependent variables as well as metrics in an intuitive way.

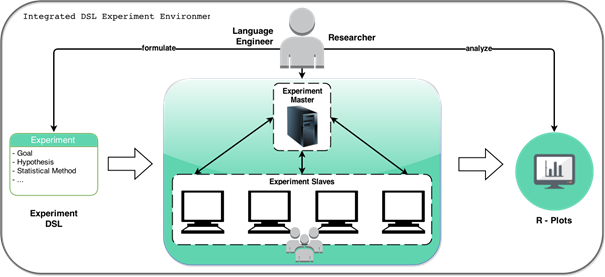

In the following example, we formulate an experiment that compares the efficiency of two DSLs in terms of the time it takes to instantiate them to model a concrete problem. The example in Figure 2 describes a goal that follows the goal template of ExpDSL, together with a null hypothesis (“The time to create tests with both DSLs is equal”) and an alternative hypothesis (“The time to create tests differs significantly”). The hypotheses are based on the independent variable (the DSL type in our case) and the dependent variable (the time needed to create a language instance).
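To make the structure of such an experiment definition concrete, the goal template, hypotheses, and variables of the running example can be mirrored in a few lines of Python. This is only an illustrative sketch: the class and field names are assumptions made for this example and are not ExpDSL syntax or part of the MPS environment.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    """Goal template with the five slots used in the running example."""
    object_of_study: str
    purpose: str
    quality_focus: str
    perspective: str
    context: str

    def render(self) -> str:
        # Assemble the slots into the sentence form shown in the example.
        return (
            f"Analyze {self.object_of_study} "
            f"for the purpose of {self.purpose} "
            f"with respect to {self.quality_focus} "
            f"from the point of view of {self.perspective} "
            f"in the context of {self.context}."
        )


@dataclass
class Experiment:
    """Hypothetical container for one experiment definition."""
    goal: Goal
    null_hypothesis: str
    alternative_hypothesis: str
    independent_variable: str
    dependent_variable: str


experiment = Experiment(
    goal=Goal(
        object_of_study="the efficiency of similar test DSLs",
        purpose="evaluation",
        quality_focus="creation time",
        perspective="a DSL user",
        context="graduate students using assisting editors for test case creation",
    ),
    null_hypothesis="The time to create tests with both DSLs is equal",
    alternative_hypothesis="The time to create tests differs significantly",
    independent_variable="DSL type",
    dependent_variable="time needed to create a language instance",
)

print(experiment.goal.render())
```

Keeping the goal as separate slots rather than free text is what allows a tool to check that every part of the template has been filled in before the experiment starts.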

Experiment Operation

In our tool environment MPS is not only used to design and apply specific DSLs, but also as an execution platform for experiment operation.

MPS can operate in either master or client mode. The architecture of the environment is shown in Figure 3. When an experiment starts, the language instances are deployed to the clients. While a language user works with the assigned DSL instance, our MPS plugin collects metrics such as the number of deletions or the time needed to complete a specific task. The experiment master, which distributes the tasks to the clients, groups the metrics. After the experiment is finished, the metrics are processed according to the analysis procedure, and graphs are created and embedded into reports.

Figure 3: Architecture of the integrated DSL experimentation environment
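The division of work between master and clients can be sketched as follows. This is a simplified, in-process illustration, not the MPS plugin itself: the names ExperimentMaster and MetricRecord, the alternating assignment strategy, and all values are assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRecord:
    participant: str  # hypothetical client identifier
    dsl: str          # DSL variant assigned to the participant
    metric: str       # e.g. "creation_time_s" or "deletions"
    value: float


class ExperimentMaster:
    """Assigns DSL variants to clients and groups the metrics they report."""

    def __init__(self, variants):
        self.variants = list(variants)
        self.assignment = {}
        self.records = []

    def assign(self, participants):
        # Alternate variants so the groups stay balanced. This is an
        # assumption; the article does not state how tasks are distributed.
        for i, participant in enumerate(participants):
            self.assignment[participant] = self.variants[i % len(self.variants)]
        return self.assignment

    def report(self, participant, metric, value):
        # Called on behalf of a client whenever a metric has been collected.
        dsl = self.assignment[participant]
        self.records.append(MetricRecord(participant, dsl, metric, float(value)))

    def grouped(self, metric):
        # Group the values of one metric by DSL variant for later analysis.
        groups = defaultdict(list)
        for record in self.records:
            if record.metric == metric:
                groups[record.dsl].append(record.value)
        return dict(groups)


master = ExperimentMaster(["DSL1", "DSL2"])
master.assign(["p1", "p2", "p3", "p4"])
# Made-up values, for illustration only.
master.report("p1", "creation_time_s", 310)
master.report("p2", "creation_time_s", 275)
master.report("p3", "creation_time_s", 298)
master.report("p4", "deletions", 4)
print(master.grouped("creation_time_s"))
```

Grouping by DSL variant at the master yields one sample per treatment, which is the shape the subsequent statistical analysis expects.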

Analysis & Interpretation

In the analysis and interpretation phase, descriptive and inferential statistical analyses are performed.

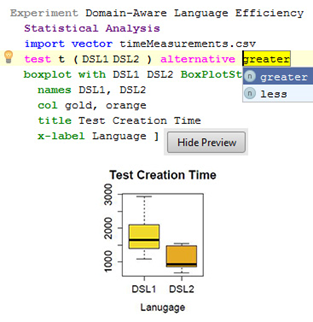

In our case (see Figure 4), a t-test is applied (test t ( DSL1 DSL2 ) alternative greater) to test whether the creation time of a language instance with DSL1 differs significantly from that with DSL2. Furthermore, a boxplot (boxplot with DSL1 DSL2) is defined and created in MPS.

The data analysis is based on the runtime data stored in the file timeMeasurement.csv that is imported via the command import vector. The language editor also provides completion support as shown for the type of one-tailed t-test (greater, less) in Figure 4.

Figure 4: Screenshot of the editor prototype
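For readers who want to see what this analysis step computes, the following Python sketch reproduces its core with the standard library only. It is not MetaR or the generated R code. The CSV column names (dsl, seconds) and the sample values are assumptions, since the layout of timeMeasurement.csv is not described here, and the sketch uses Welch's variant of the t-test, which is the default of R's t.test but is not confirmed as the variant used in the environment.

```python
import csv
import io
import math
import statistics


def load_times(csv_text):
    """Group creation times by DSL; assumes columns named 'dsl' and 'seconds'."""
    groups = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups.setdefault(row["dsl"], []).append(float(row["seconds"]))
    return groups


def welch_t(a, b):
    """Return Welch's t statistic and degrees of freedom for samples a and b."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def five_number_summary(values):
    """Minimum, quartiles, and maximum: the numbers a boxplot is drawn from."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)


# Made-up measurements, for illustration only.
SAMPLE_CSV = """dsl,seconds
DSL1,300
DSL1,310
DSL1,320
DSL1,330
DSL1,340
DSL2,310
DSL2,320
DSL2,330
DSL2,340
DSL2,350
"""

groups = load_times(SAMPLE_CSV)
t, df = welch_t(groups["DSL1"], groups["DSL2"])
print(f"t = {t:.3f}, df = {df:.1f}")
print(five_number_summary(groups["DSL1"]))
```

The one-tailed p-value for the alternative greater is the upper-tail probability of the t distribution at t with df degrees of freedom. The standard library has no t distribution, so in practice one would use a statistics package, for example SciPy's scipy.stats.ttest_ind with equal_var=False and alternative="greater".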

Presentation & Package

Based on the results of the conducted experiment, reports can be generated automatically. The environment can generate PDF reports from pre-filled LaTeX templates that follow the reporting recommendations of Wohlin et al. [7] and contain all relevant information about the experiment.

The integrated tool environment for experimentation in DSL engineering is still work in progress. At the moment, we are migrating the tool environment to Xtext [8] to better integrate it with related environments such as Collaboro [9], which allows crowdsourced analysis of DSLs. In addition, we are extending the feature set, for instance to integrate questionnaires that language users have to answer and that provide additional data for comparing DSL variants.
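The idea of a pre-filled template can be illustrated with a small Python sketch that substitutes experiment data into a LaTeX skeleton. The template below is hypothetical and far simpler than a real report; the actual templates, the LaTeX DSL, and the file name boxplot.pdf are not taken from the environment.

```python
from string import Template

# Hypothetical minimal template; the real report templates are not shown here.
REPORT_TEMPLATE = Template(r"""\documentclass{article}
\usepackage{graphicx}
\begin{document}
\section{Goals}
$goal
\section{Hypotheses}
\begin{itemize}
\item $h0
\item $h1
\end{itemize}
\section{Analysis}
\includegraphics[width=\linewidth]{$plot}
\end{document}
""")


def render_report(goal, h0, h1, plot):
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return REPORT_TEMPLATE.substitute(goal=goal, h0=h0, h1=h1, plot=plot)


tex = render_report(
    goal="Analyze the efficiency of similar test DSLs with respect to creation time.",
    h0="The time to create tests with both DSLs is equal",
    h1="The time to create tests differs significantly",
    plot="boxplot.pdf",  # assumed file name of a previously generated plot
)
print(tex)
```

The resulting string can be written to a .tex file and compiled with a LaTeX toolchain to obtain the PDF. Note that this sketch does not escape LaTeX special characters in the inserted values.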

References

[1] MPS, available online at https://www.jetbrains.com/mps/

[2] F. Häser, M. Felderer, R. Breu: Is business domain language support beneficial for creating test case specifications: A controlled experiment. Information and Software Technology, 79, 2016, 52-62, https://doi.org/10.1016/j.infsof.2016.07.001

[3] F. Häser, M. Felderer, R. Breu: An integrated tool environment for experimentation in domain specific language engineering. Proceedings of the 20th International Conference on Evaluation and Assessment in Software Engineering. ACM, 2016, https://doi.org/10.1145/2915970.2916010

[4] M. Freire, U. Kulesza, E. Aranha, G. Nery, D. Costa, A. Jedlitschka, E. Campos, S. T. Acuna, M. N. Gomez: Assessing and evolving a domain specific language for formalizing software engineering experiments: An empirical study. International Journal of Software Engineering and Knowledge Engineering, 24(10):1509-1531, 2014, http://www.worldscientific.com/doi/abs/10.1142/S0218194014400178

[5] F. Campagne: MetaR: A DSL for statistical analysis. Campagne Laboratory, 2015, available online at http://campagnelab.org/software/metar/

[6] M. Völter: Preliminary experience of using mbeddr for developing embedded software. In Tagungsband des Dagstuhl-Workshops, 2014, http://mbeddr.com/files/mbees2014.pdf

[7] C. Wohlin, P. Runeson, M. Höst, M. C. Ohlsson, B. Regnell, A. Wesslén: Experimentation in software engineering. Springer, 2012, http://www.springer.com/de/book/9783642290435

[8] Xtext, available online at https://eclipse.org/Xtext/

[9] J. L. C. Izquierdo, J. Cabot: Collaboro: a collaborative (meta) modeling tool. PeerJ Computer Science, 2, e84, 2016, available online at https://peerj.com/articles/cs-84/

Prof. Dr. Michael Felderer is an assistant professor at the Institute of Computer Science at the University of Innsbruck, Austria. He holds a PhD as well as a habilitation degree in computer science. His research interests are in the areas of software and security engineering with a focus on software testing, security testing, model engineering, software processes, requirements engineering, software analytics and empirical software engineering. Dr. Felderer works in close collaboration with industry and transfers his research results into practice as a consultant and speaker at industrial conferences.
