Domain experts often use visual languages to create models that capture and represent software systems. Visual modeling workbenches commonly provide features such as copy & paste, drag and drop, zooming and automatic layout of elements. In recent years, we have witnessed rapid growth in software and technologies for human-machine interfaces, such as touch-sensitive surfaces, haptic devices, voice recognition and synthesis, gesture detection and image recognition. In addition, technologies such as Virtual Reality (VR) and Augmented Reality (AR) allow users to immerse themselves in digital worlds that replace the real one, or to enrich their view of the physical environment with virtual elements. These environments can be combined with such human-machine interactions to provide more realistic Extended Reality (XR) scenarios.

Could these kinds of environments be useful for modeling with Domain-Specific Languages (DSLs)? To explore model manipulation mechanisms beyond traditional visual or textual tools, we have created a proof-of-concept app and defined a framework for designing model editors based on Augmented Reality.

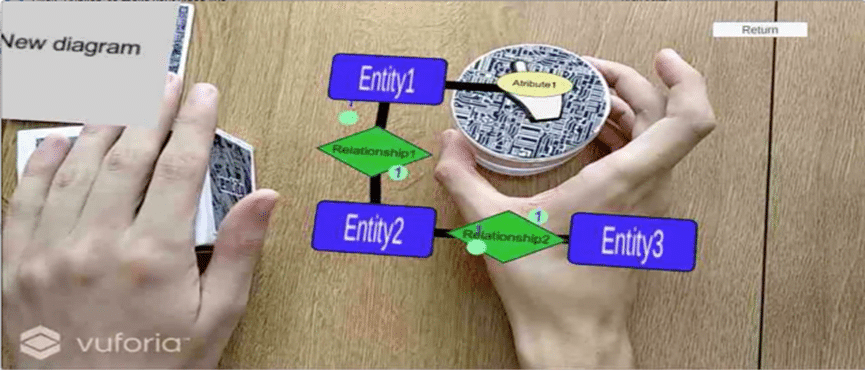

We have developed an Android mobile application to edit Entity-Relationship (ER) models using AR. The tool can generate SQL database definition (DDL) scripts from an ER model. It was built with the Unity engine and the Vuforia AR framework. A video of the running tool is available online.
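The ER-to-SQL step can be illustrated with a minimal Python sketch. It assumes a simplified in-memory ER model (the `Attribute`, `Entity` and `Relationship` classes and the `to_ddl` function are hypothetical, not taken from the app's source): each entity has exactly one key attribute, a 1:N relationship adds a foreign key to the table on the "many" side, and an N:M relationship becomes a join table. The actual tool is written in C# on Unity and may apply different mapping rules.

```python
from dataclasses import dataclass, field


@dataclass
class Attribute:
    name: str
    sql_type: str = "VARCHAR(255)"
    is_key: bool = False


@dataclass
class Entity:
    name: str
    attributes: list = field(default_factory=list)

    def key(self) -> Attribute:
        # Simplifying assumption: exactly one attribute per entity is the key
        return next(a for a in self.attributes if a.is_key)


@dataclass
class Relationship:
    name: str
    source: Entity    # the "one" side of a 1:N relationship
    target: Entity    # the "many" side of a 1:N relationship
    cardinality: str  # "1:N" or "N:M"


def create_table(name, columns, constraints):
    body = ",\n  ".join(columns + constraints)
    return f"CREATE TABLE {name} (\n  {body}\n);"


def fk_column(entity):
    # Foreign-key column name derived from the referenced entity, e.g. department_id
    return f"{entity.name.lower()}_{entity.key().name}"


def to_ddl(entities, relationships):
    extra_cols = {e.name: [] for e in entities}  # FK columns added by 1:N links
    extra_cons = {e.name: [] for e in entities}  # FK constraints added by 1:N links
    join_tables = []
    for r in relationships:
        sk, tk = r.source.key(), r.target.key()
        if r.cardinality == "1:N":
            fk = fk_column(r.source)
            extra_cols[r.target.name].append(f"{fk} {sk.sql_type}")
            extra_cons[r.target.name].append(
                f"FOREIGN KEY ({fk}) REFERENCES {r.source.name}({sk.name})")
        elif r.cardinality == "N:M":
            src_col, tgt_col = fk_column(r.source), fk_column(r.target)
            join_tables.append(create_table(
                r.name,
                [f"{src_col} {sk.sql_type}", f"{tgt_col} {tk.sql_type}"],
                [f"PRIMARY KEY ({src_col}, {tgt_col})",
                 f"FOREIGN KEY ({src_col}) REFERENCES {r.source.name}({sk.name})",
                 f"FOREIGN KEY ({tgt_col}) REFERENCES {r.target.name}({tk.name})"]))
        else:
            raise ValueError(f"unsupported cardinality: {r.cardinality}")
    tables = []
    for e in entities:
        cols = [f"{a.name} {a.sql_type}" for a in e.attributes] + extra_cols[e.name]
        cons = [f"PRIMARY KEY ({e.key().name})"] + extra_cons[e.name]
        tables.append(create_table(e.name, cols, cons))
    return "\n\n".join(tables + join_tables)


# Usage: one department employs many employees
dept = Entity("Department", [Attribute("id", "INTEGER", True), Attribute("name")])
emp = Entity("Employee", [Attribute("id", "INTEGER", True), Attribute("name")])
print(to_ddl([dept, emp], [Relationship("WorksIn", dept, emp, "1:N")]))
```

The 1:N versus N:M split follows the textbook ER-to-relational mapping; relationship attributes, weak entities and composite keys are left out to keep the sketch short.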

Like any other modeling tool, this app allows users to open, create and edit models, as well as import and export models as XML files. However, unlike traditional visual modeling tools, it does not provide a drawing canvas, because any real surface can become a modeling area. Nor is there an on-screen toolbox with buttons. Instead, operations on the model are executed when the device camera recognizes and tracks a set of printed markers. Under the hood, the nodes and edges of the ER model are managed as GameObjects, Unity's containers for the visual elements of a scene. At runtime, several C# scripts detect collisions among virtual elements in order to enforce the modeling rules and create links among entities, relationships and attributes.
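The collision-driven linking can be approximated outside Unity as follows. This Python sketch replaces Unity colliders with a sphere-overlap test and assumes a small rule set (an attribute has a single owner, a relationship joins at most two entities, and two elements of the same kind are never linked). The `Element`, `can_link` and `on_frame` names are hypothetical, and the app's real validation rules may differ.

```python
import math
from dataclasses import dataclass, field

# Pairs of element kinds that may be linked in an ER model (assumed rule set)
ALLOWED = {
    frozenset({"entity", "relationship"}),
    frozenset({"entity", "attribute"}),
    frozenset({"relationship", "attribute"}),
}


@dataclass(eq=False)  # identity equality: links form cycles, so no field-wise compare
class Element:
    name: str
    kind: str          # "entity", "relationship" or "attribute"
    position: tuple    # (x, y, z) in scene coordinates
    radius: float = 0.5
    links: list = field(default_factory=list)


def collides(a, b):
    # Sphere-sphere overlap test, a stand-in for Unity's collider callbacks
    return math.dist(a.position, b.position) <= a.radius + b.radius


def can_link(a, b):
    if frozenset({a.kind, b.kind}) not in ALLOWED or b in a.links:
        return False
    # An attribute belongs to exactly one owner
    if any(x.kind == "attribute" and x.links for x in (a, b)):
        return False
    # A binary relationship connects at most two entities
    for x, y in ((a, b), (b, a)):
        if x.kind == "relationship" and y.kind == "entity":
            if sum(1 for l in x.links if l.kind == "entity") >= 2:
                return False
    return True


def on_frame(elements):
    """Check every pair once per frame and create the links the rules allow."""
    created = []
    for i, a in enumerate(elements):
        for b in elements[i + 1:]:
            if collides(a, b) and can_link(a, b):
                a.links.append(b)
                b.links.append(a)
                created.append((a.name, b.name))
    return created


# Usage: a "Customer places Order" fragment arranged on a table
customer = Element("Customer", "entity", (0.0, 0.0, 0.0))
places = Element("Places", "relationship", (0.9, 0.0, 0.0))
order = Element("Order", "entity", (1.8, 0.0, 0.0))
name = Element("name", "attribute", (0.0, 0.9, 0.0))
print(on_frame([customer, places, order, name]))
```

Checking every pair is quadratic in the number of elements, which is acceptable for the handful of objects in a tabletop scene; in Unity, the physics engine performs this detection itself and only notifies scripts of actual contacts.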

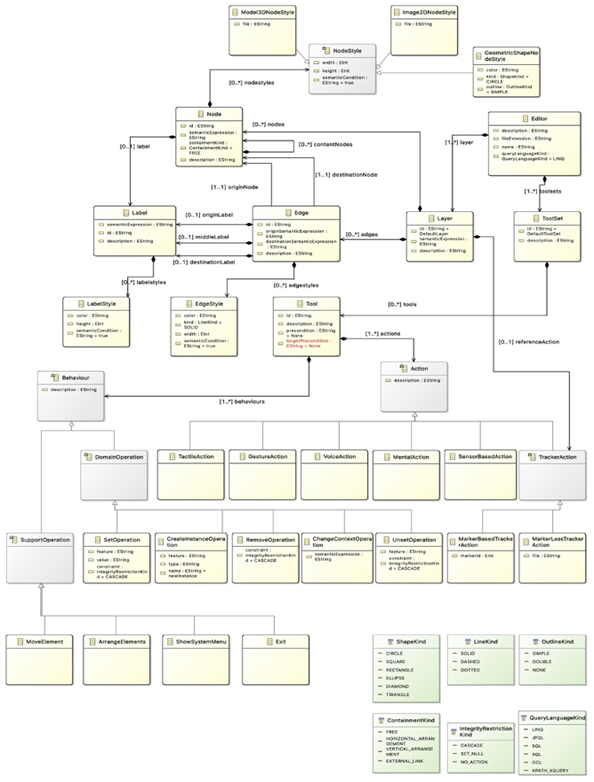

In addition, we have designed a framework, following a model-driven development strategy, to generate AR model editors for domain-specific languages. The required steps are similar to those followed to create modeling tools in the Eclipse ecosystem with frameworks such as Sirius. First, the abstract syntax of the DSL is defined with the Ecore language. Second, the concrete syntax is designed using a new metamodel that defines how the DSL elements are represented in the AR scene; we provide a set of EMF forms to model AR editors with this metamodel. Then, DSL developers can automatically generate the support tool for the DSL, customize the generated code and even extend it to incorporate the DSL semantics, via model interpretation or code generation. Finally, the whole source code is compiled and built for the target platform.
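To make the two-level definition concrete, the sketch below pairs a tiny abstract syntax (metaclasses with references) with a concrete syntax that maps metaclasses to AR shapes, edges and printed markers, and runs a few consistency checks before generation. It is a Python illustration under assumed names (`MetaClass`, `NodeMapping`, `EdgeMapping`, `check_editor`); it does not reproduce the paper's Ecore metamodel or its EMF forms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetaClass:            # abstract syntax: stands in for an Ecore EClass
    name: str
    references: tuple = ()  # names of metaclasses this one may point to


@dataclass(frozen=True)
class NodeMapping:          # concrete syntax: how a metaclass appears in AR
    metaclass: str
    shape: str              # hypothetical shape name, e.g. "cube"
    marker: str             # hypothetical ID of the printed marker that creates it


@dataclass(frozen=True)
class EdgeMapping:          # concrete syntax: a reference drawn as a 3D link
    source: str
    target: str
    style: str = "line"


def check_editor(abstract, nodes, edges):
    """Return human-readable problems found in a concrete-syntax definition."""
    by_name = {m.name: m for m in abstract}
    problems = []
    for n in nodes:
        if n.metaclass not in by_name:
            problems.append(f"node maps unknown metaclass {n.metaclass}")
    markers = [n.marker for n in nodes]
    for m in sorted(set(markers)):
        if markers.count(m) > 1:
            problems.append(f"marker {m} is used by several tools")
    # Only metaclasses that exist AND have a node mapping can be edge endpoints
    drawable = {n.metaclass for n in nodes} & set(by_name)
    for e in edges:
        if e.source not in drawable or e.target not in drawable:
            problems.append(f"edge {e.source}->{e.target} joins undrawable classes")
        elif e.target not in by_name[e.source].references:
            problems.append(f"{e.source} has no reference to {e.target}")
    return problems


# Usage: an ER-like DSL in which entities own attributes
abstract = [MetaClass("Entity", ("Attribute",)), MetaClass("Attribute")]
nodes = [NodeMapping("Entity", "cube", "M1"), NodeMapping("Attribute", "sphere", "M2")]
edges = [EdgeMapping("Entity", "Attribute")]
print(check_editor(abstract, nodes, edges))  # → []
```

Validating the concrete syntax against the abstract syntax before generating anything mirrors what the generation step needs: every shape, marker and link in the AR scene must correspond to something the metamodel allows.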

Augmented Reality Editor metamodel

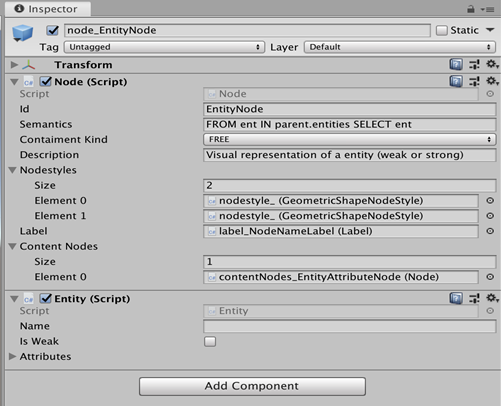

To perform code generation, we provide an extension for the Unity Editor. The extension generates C# scripts for both the DSL abstract syntax elements and the AR Editor metamodel elements; internally, this step is performed by a Java program built on Acceleo. In addition, the extension populates the project's main scene with GameObjects (fig. 3), according to the nodes, edges and tool set defined in the concrete syntax. Finally, all of these objects are saved as Unity prefabs so that new instances can be created in the scenes. However, code generation is still limited and requires further development.
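The template-based generation of C# scripts can be sketched as below. The article states that the real pipeline uses Acceleo templates run from Java; this Python version uses `string.Template` instead, assumes a partial mapping from Ecore primitive types to C# types, and emits one `MonoBehaviour` subclass per metaclass. The `generate_script` and `generate_all` functions are hypothetical, and the sketch does not create GameObjects or prefabs, which require the Unity Editor API.

```python
from string import Template

# Ecore primitive types mapped to C# types (partial, assumed mapping)
ECORE_TO_CS = {"EString": "string", "EInt": "int", "EBoolean": "bool", "EFloat": "float"}

CLASS_TEMPLATE = Template("""using UnityEngine;

// Generated from metaclass $name. Do not edit by hand.
public class $name : MonoBehaviour
{
$fields
}
""")


def generate_script(name, attributes):
    """attributes: list of (attribute name, Ecore type name) pairs."""
    lines = []
    for attr, ecore_type in attributes:
        cs_type = ECORE_TO_CS.get(ecore_type)
        if cs_type is None:
            raise ValueError(f"no C# mapping for {ecore_type}")
        lines.append(f"    public {cs_type} {attr};")
    return CLASS_TEMPLATE.substitute(name=name, fields="\n".join(lines))


def generate_all(metaclasses):
    """Map each metaclass to a (file name -> C# source) entry."""
    return {f"{name}.cs": generate_script(name, attrs)
            for name, attrs in metaclasses.items()}


# Usage: one metaclass of an ER-like DSL
files = generate_all({"Entity": [("entityName", "EString"), ("isWeak", "EBoolean")]})
print(files["Entity.cs"])
```

Keeping the target-language text in a template and the type mapping in a table separates what changes per DSL (the metaclasses) from what stays fixed (the C# scaffolding), which is the same separation a model-to-text language such as Acceleo encourages.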

Unity inspector showing properties of the visual representation of a DSL metaclass

The approach and its evaluation are described in the paper “Model-driven development of augmented reality-based editors for domain-specific languages” by Iván Ruiz, Rubén Baena, José Miguel Mota, and Inmaculada Arnedillo. The full paper can be downloaded from the website of the Interaction Design and Architecture(s) journal. The initial mobile app was developed by Pablo Mariscal. The source code and resources are available on GitHub.

This work is only a first step toward DSL tools based on Extended Reality; further research and development are required.

Assistant Professor at the University of Cádiz (Spain). Member of the Software Process Improvement and Formal Methods (SPI-FM) research group. My research areas are Technology-Enhanced Learning and Software Process Improvement. I am interested in end-user development tools based on visual languages, model-driven techniques, and Java full-stack technologies, among others.
